Merge branch '3.0' into fix/TS-4421-3.0

commit a083f45ac1

|

|

@ -2,7 +2,7 @@

|

|||

IF (DEFINED VERNUMBER)

|

||||

SET(TD_VER_NUMBER ${VERNUMBER})

|

||||

ELSE ()

|

||||

SET(TD_VER_NUMBER "3.2.3.0.alpha")

|

||||

SET(TD_VER_NUMBER "3.2.4.0.alpha")

|

||||

ENDIF ()

|

||||

|

||||

IF (DEFINED VERCOMPATIBLE)

|

||||

|

|

|

|||

|

|

@ -842,12 +842,12 @@ consumer = Consumer({"group.id": "local", "td.connect.ip": "127.0.0.1"})

|

|||

|

||||

In addition to native connections, the client library also supports subscriptions via websockets.

|

||||

|

||||

The syntax for creating a consumer is "consumer = consumer = Consumer(conf=configs)". You need to specify that the `td.connect.websocket.scheme` parameter is set to "ws" in the configuration. For more subscription api parameters, please refer to [Data Subscription](../../develop/tmq/#create-a-consumer).

|

||||

The syntax for creating a consumer is "consumer = Consumer(conf=configs)". You need to specify that the `td.connect.websocket.scheme` parameter is set to "ws" in the configuration. For more subscription api parameters, please refer to [Data Subscription](../../develop/tmq/#create-a-consumer).

|

||||

|

||||

```python

|

||||

import taosws

|

||||

|

||||

consumer = taosws.(conf={"group.id": "local", "td.connect.websocket.scheme": "ws"})

|

||||

consumer = taosws.Consumer(conf={"group.id": "local", "td.connect.websocket.scheme": "ws"})

|

||||

```

|

||||

|

||||

</TabItem>

|

||||

|

|

@ -887,13 +887,13 @@ The `poll` function is used to consume data in tmq. The parameter of the `poll`

|

|||

|

||||

```python

|

||||

while True:

|

||||

res = consumer.poll(1)

|

||||

if not res:

|

||||

message = consumer.poll(1)

|

||||

if not message:

|

||||

continue

|

||||

err = res.error()

|

||||

err = message.error()

|

||||

if err is not None:

|

||||

raise err

|

||||

val = res.value()

|

||||

val = message.value()

|

||||

|

||||

for block in val:

|

||||

print(block.fetchall())

|

||||

|

|

@ -902,16 +902,14 @@ while True:

|

|||

</TabItem>

|

||||

<TabItem value="websocket" label="WebSocket connection">

|

||||

|

||||

The `poll` function is used to consume data in tmq. The parameter of the `poll` function is a value of type float representing the timeout in seconds. It returns a `Message` before timing out, or `None` on timing out. You have to handle error messages in response data.

|

||||

The `poll` function is used to consume data in tmq. The parameter of the `poll` function is a value of type float representing the timeout in seconds. It returns a `Message` before timing out, or `None` on timing out.

|

||||

|

||||

```python

|

||||

while True:

|

||||

res = consumer.poll(timeout=1.0)

|

||||

if not res:

|

||||

message = consumer.poll(1)

|

||||

if not message:

|

||||

continue

|

||||

err = res.error()

|

||||

if err is not None:

|

||||

raise err

|

||||

|

||||

for block in message:

|

||||

for row in block:

|

||||

print(row)

|

||||

|

|

|

|||

|

|

@ -41,16 +41,28 @@ window_clause: {

|

|||

SESSION(ts_col, tol_val)

|

||||

| STATE_WINDOW(col)

|

||||

| INTERVAL(interval_val [, interval_offset]) [SLIDING (sliding_val)]

|

||||

| EVENT_WINDOW START WITH start_trigger_condition END WITH end_trigger_condition

|

||||

| COUNT_WINDOW(count_val[, sliding_val])

|

||||

}

|

||||

```

|

||||

|

||||

`SESSION` indicates a session window, and `tol_val` indicates the maximum range of the time interval. If the time interval between two consecutive rows is within the interval specified by `tol_val`, they belong to the same session window; otherwise, a new session window is started automatically.
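The session rule described above can be illustrated with a small sketch. This is not TDengine code: `session_windows` is a hypothetical helper, timestamps are plain integers, and a gap exactly equal to `tol_val` is assumed to stay in the same window.

```python
from typing import List


def session_windows(timestamps: List[int], tol_val: int) -> List[List[int]]:
    """Group timestamps into session windows (illustrative only)."""
    windows: List[List[int]] = []
    for ts in sorted(timestamps):
        # Open a new window when none exists yet, or when the gap to the
        # previous row is larger than tol_val (assumed boundary behavior).
        if not windows or ts - windows[-1][-1] > tol_val:
            windows.append([ts])
        else:
            windows[-1].append(ts)
    return windows


if __name__ == "__main__":
    # Gaps of 21 and 46 exceed tol_val=10, so three windows are produced.
    print(session_windows([0, 5, 9, 30, 34, 80], tol_val=10))
```

Each inner list corresponds to one session window over which an aggregate such as `count(*)` would be computed.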

|

||||

|

||||

`EVENT_WINDOW` is determined by a window start condition and a window close condition. The window starts when `start_trigger_condition` evaluates to true and closes when `end_trigger_condition` evaluates to true. `start_trigger_condition` and `end_trigger_condition` can be any conditional expressions supported by TDengine and can include multiple columns.
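A rough sketch of the start/end logic follows. It assumes that the row opening a window is also tested against the end condition and that a window still open when the data ends is discarded; neither detail is confirmed by the text above. The `event_windows` helper and the sample rows are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, float]


def event_windows(
    rows: List[Row],
    start_cond: Callable[[Row], bool],
    end_cond: Callable[[Row], bool],
) -> List[List[Row]]:
    """Split rows into event windows (illustrative only)."""
    windows: List[List[Row]] = []
    current: Optional[List[Row]] = None
    for row in rows:
        if current is None:
            if not start_cond(row):
                continue  # no window is open and this row does not start one
            current = []
        current.append(row)
        if end_cond(row):
            windows.append(current)  # the closing row belongs to the window
            current = None
    return windows


if __name__ == "__main__":
    data = [{"voltage": v} for v in [5, -1, 3, 10, 2, -2, 12, -3]]
    result = event_windows(
        data,
        start_cond=lambda r: r["voltage"] < 0,
        end_cond=lambda r: r["voltage"] > 9,
    )
    print([[r["voltage"] for r in w] for w in result])
```

The conditions mirror `START WITH voltage < 0 END WITH voltage > 9` from the stream example in this diff.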

|

||||

|

||||

`COUNT_WINDOW` is a counting window that divides data into windows of a fixed number of rows. `count_val` is a constant positive integer that must be greater than or equal to 2. The maximum value is 2147483648. `count_val` is the maximum number of rows in each `COUNT_WINDOW`; when the total number of rows is not evenly divisible by `count_val`, the last window contains fewer than `count_val` rows. `sliding_val` is a constant that represents the amount by which the window slides, similar to `SLIDING` in `INTERVAL`.
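The row-count partitioning can be sketched as below. The `count_windows` helper is hypothetical, and the reading of `sliding_val` as "start a new window every `sliding_val` rows" is an assumption based on the analogy with `SLIDING`, not something the text confirms.

```python
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def count_windows(
    rows: Sequence[T], count_val: int, sliding_val: Optional[int] = None
) -> List[List[T]]:
    """Split rows into windows of at most count_val rows (illustrative only)."""
    if count_val < 2:
        raise ValueError("count_val must be greater than or equal to 2")
    # Without sliding_val the windows do not overlap (assumed default).
    step = count_val if sliding_val is None else sliding_val
    if step < 1:
        raise ValueError("sliding_val must be a positive integer")
    return [list(rows[i:i + count_val]) for i in range(0, len(rows), step)]


if __name__ == "__main__":
    data = list(range(1, 11))  # ten rows, already ordered by timestamp
    print(count_windows(data, 4))     # the last window holds only two rows
    print(count_windows(data, 4, 2))  # overlapping windows, two rows apart
```

With ten rows and `count_val` of 4, the final window is shorter than the others, which matches the remainder behavior described above.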

|

||||

|

||||

For example, the following SQL statement creates a stream and automatically creates a supertable named `avg_vol`. The stream has a 1 minute time window that slides forward in 30 second intervals to calculate the average voltage of the meters supertable.

|

||||

|

||||

```sql

|

||||

CREATE STREAM avg_vol_s INTO avg_vol AS

|

||||

SELECT _wstart, count(*), avg(voltage) FROM meters PARTITION BY tbname INTERVAL(1m) SLIDING(30s);

|

||||

|

||||

CREATE STREAM streams0 INTO streamt0 AS

|

||||

SELECT _wstart, count(*), avg(voltage) from meters PARTITION BY tbname EVENT_WINDOW START WITH voltage < 0 END WITH voltage > 9;

|

||||

|

||||

CREATE STREAM streams1 IGNORE EXPIRED 1 WATERMARK 100s INTO streamt1 AS

|

||||

SELECT _wstart, count(*), avg(voltage) from meters PARTITION BY tbname COUNT_WINDOW(10);

|

||||

```

|

||||

|

||||

## Partitions of Stream

|

||||

|

|

|

|||

|

|

@ -10,6 +10,10 @@ For TDengine 2.x installation packages by version, please visit [here](https://t

|

|||

|

||||

import Release from "/components/ReleaseV3";

|

||||

|

||||

## 3.2.3.0

|

||||

|

||||

<Release type="tdengine" version="3.2.3.0" />

|

||||

|

||||

## 3.2.2.0

|

||||

|

||||

<Release type="tdengine" version="3.2.2.0" />

|

||||

|

|

|

|||

|

|

@ -856,7 +856,7 @@ taosws `Consumer` API 提供了基于 Websocket 订阅 TMQ 数据的 API。创

|

|||

```python

|

||||

import taosws

|

||||

|

||||

consumer = taosws.(conf={"group.id": "local", "td.connect.websocket.scheme": "ws"})

|

||||

consumer = taosws.Consumer(conf={"group.id": "local", "td.connect.websocket.scheme": "ws"})

|

||||

```

|

||||

|

||||

</TabItem>

|

||||

|

|

@ -896,13 +896,13 @@ Consumer API 的 `poll` 方法用于消费数据,`poll` 方法接收一个 flo

|

|||

|

||||

```python

|

||||

while True:

|

||||

res = consumer.poll(1)

|

||||

if not res:

|

||||

message = consumer.poll(1)

|

||||

if not message:

|

||||

continue

|

||||

err = res.error()

|

||||

err = message.error()

|

||||

if err is not None:

|

||||

raise err

|

||||

val = res.value()

|

||||

val = message.value()

|

||||

|

||||

for block in val:

|

||||

print(block.fetchall())

|

||||

|

|

@ -911,16 +911,14 @@ while True:

|

|||

</TabItem>

|

||||

<TabItem value="websocket" label="WebSocket 连接">

|

||||

|

||||

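The `poll` method of the Consumer API is used to consume data. The `poll` method takes a timeout of type float, in seconds (s), and returns a value of type Message before the timeout expires, or `None` on timeout. Consumers must check the returned data for errors by calling the Message's `error()` method.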

|

||||

The `poll` method of the Consumer API is used to consume data. The `poll` method takes a timeout of type float, in seconds (s), and returns a value of type Message before the timeout expires, or `None` on timeout.

|

||||

|

||||

```python

|

||||

while True:

|

||||

res = consumer.poll(timeout=1.0)

|

||||

if not res:

|

||||

message = consumer.poll(1)

|

||||

if not message:

|

||||

continue

|

||||

err = res.error()

|

||||

if err is not None:

|

||||

raise err

|

||||

|

||||

for block in message:

|

||||

for row in block:

|

||||

print(row)

|

||||

|

|

|

|||

|

|

@ -49,6 +49,7 @@ window_clause: {

|

|||

| STATE_WINDOW(col)

|

||||

| INTERVAL(interval_val [, interval_offset]) [SLIDING (sliding_val)] [FILL(fill_mod_and_val)]

|

||||

| EVENT_WINDOW START WITH start_trigger_condition END WITH end_trigger_condition

|

||||

| COUNT_WINDOW(count_val[, sliding_val])

|

||||

}

|

||||

```

|

||||

|

||||

|

|

@ -180,6 +181,19 @@ select _wstart, _wend, count(*) from t event_window start with c1 > 0 end with c

|

|||

|

||||

|

||||

|

||||

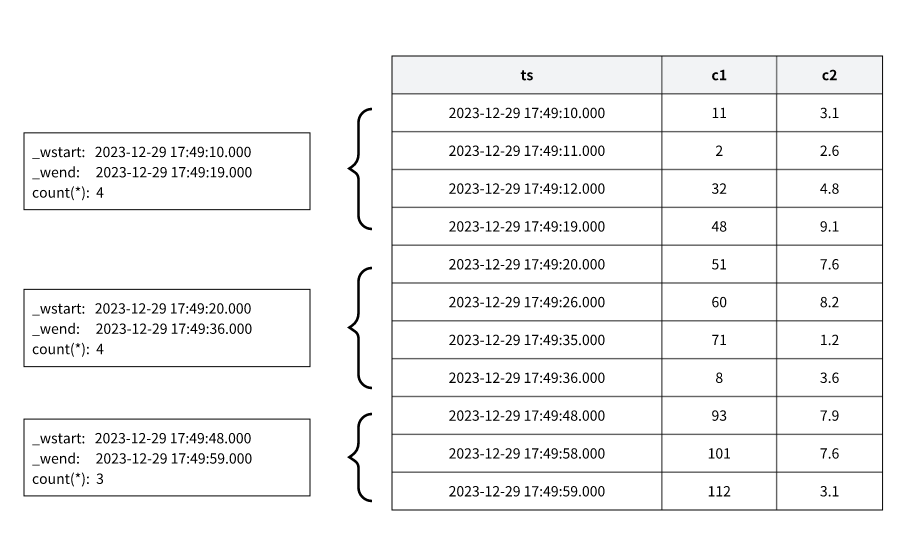

### Count Window

|

||||

|

||||

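A count window divides data into windows by a fixed number of rows. By default, the data is sorted by timestamp, divided into multiple windows according to the value of count_val, and then aggregated. count_val is the maximum number of rows in each count window; when the total number of rows is not evenly divisible by count_val, the last window contains fewer than count_val rows. sliding_val is a constant that represents the amount by which the window slides, similar to SLIDING in INTERVAL.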

|

||||

|

||||

Taking the following SQL statement as an example, the count windows are divided as shown in the figure:

|

||||

```sql

|

||||

select _wstart, _wend, count(*) from t count_window(4);

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### Timestamp Pseudocolumns

|

||||

|

||||

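In the results of a window aggregate query, if the SQL statement does not specify a timestamp column in the output, the final result does not automatically include the window's time column information. To output the time window information that corresponds to each aggregate result, use the timestamp-related pseudocolumns in the SELECT clause: window start time (\_WSTART), window end time (\_WEND), and window duration (\_WDURATION), as well as the pseudocolumns for the overall query window: query window start time (\_QSTART) and query window end time (\_QEND). Note that the window start time and end time are both closed-interval bounds, and the window duration is a value in the data's current time resolution. For example, if the time resolution of the current database is milliseconds, then 500 in the result means that the duration of the current time window is 500 milliseconds (500 ms).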

|

||||

|

|

|

|||

|

|

@ -49,10 +49,14 @@ window_clause: {

|

|||

SESSION(ts_col, tol_val)

|

||||

| STATE_WINDOW(col)

|

||||

| INTERVAL(interval_val [, interval_offset]) [SLIDING (sliding_val)]

|

||||

| EVENT_WINDOW START WITH start_trigger_condition END WITH end_trigger_condition

|

||||

| COUNT_WINDOW(count_val[, sliding_val])

|

||||

}

|

||||

```

|

||||

|

||||

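Here, SESSION is a session window, and tol_val is the maximum range of the time interval. Data within the tol_val time interval belongs to the same window; if the time between two consecutive rows exceeds tol_val, the next window is started automatically.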

|

||||

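EVENT_WINDOW is an event window, delimited by a start condition and an end condition. The window starts when start_trigger_condition is satisfied and closes when end_trigger_condition is satisfied. start_trigger_condition and end_trigger_condition can be any conditional expressions supported by TDengine and can include different columns.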

|

||||

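COUNT_WINDOW is a count window, which divides data into windows by a fixed number of rows. count_val is a constant positive integer that must be greater than or equal to 2 and less than 2147483648. count_val is the maximum number of rows in each COUNT_WINDOW; when the total number of rows is not evenly divisible by count_val, the last window contains fewer than count_val rows. sliding_val is a constant that represents the amount by which the window slides, similar to SLIDING in INTERVAL.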

|

||||

|

||||

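The window definitions are exactly the same as those in the time-series distinguished queries; for details, see [TDengine Distinguished Queries](../distinguished)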

|

||||

|

||||

|

|

@ -61,6 +65,12 @@ window_clause: {

|

|||

```sql

|

||||

CREATE STREAM avg_vol_s INTO avg_vol AS

|

||||

SELECT _wstart, count(*), avg(voltage) FROM meters PARTITION BY tbname INTERVAL(1m) SLIDING(30s);

|

||||

|

||||

CREATE STREAM streams0 INTO streamt0 AS

|

||||

SELECT _wstart, count(*), avg(voltage) from meters PARTITION BY tbname EVENT_WINDOW START WITH voltage < 0 END WITH voltage > 9;

|

||||

|

||||

CREATE STREAM streams1 IGNORE EXPIRED 1 WATERMARK 100s INTO streamt1 AS

|

||||

SELECT _wstart, count(*), avg(voltage) from meters PARTITION BY tbname COUNT_WINDOW(10);

|

||||

```

|

||||

|

||||

## Partitions of Stream

|

||||

|

|

|

|||

|

|

@ -10,6 +10,10 @@ TDengine 2.x 各版本安装包请访问[这里](https://www.taosdata.com/all-do

|

|||

|

||||

import Release from "/components/ReleaseV3";

|

||||

|

||||

## 3.2.3.0

|

||||

|

||||

<Release type="tdengine" version="3.2.3.0" />

|

||||

|

||||

## 3.2.2.0

|

||||

|

||||

<Release type="tdengine" version="3.2.2.0" />

|

||||

|

|

|

|||

|

|

@ -219,7 +219,6 @@ extern bool tsFilterScalarMode;

|

|||

extern int32_t tsMaxStreamBackendCache;

|

||||

extern int32_t tsPQSortMemThreshold;

|

||||

extern int32_t tsResolveFQDNRetryTime;

|

||||

extern bool tsDisableCount;

|

||||

|

||||

extern bool tsExperimental;

|

||||

// #define NEEDTO_COMPRESSS_MSG(size) (tsCompressMsgSize != -1 && (size) > tsCompressMsgSize)

|

||||

|

|

@ -234,10 +233,10 @@ int32_t taosCfgDynamicOptions(SConfig *pCfg, char *name, bool forServer);

|

|||

|

||||

struct SConfig *taosGetCfg();

|

||||

|

||||

void taosSetAllDebugFlag(int32_t flag);

|

||||

void taosSetDebugFlag(int32_t *pFlagPtr, const char *flagName, int32_t flagVal);

|

||||

void taosLocalCfgForbiddenToChange(char *name, bool *forbidden);

|

||||

int8_t taosGranted(int8_t type);

|

||||

void taosSetGlobalDebugFlag(int32_t flag);

|

||||

void taosSetDebugFlag(int32_t *pFlagPtr, const char *flagName, int32_t flagVal);

|

||||

void taosLocalCfgForbiddenToChange(char *name, bool *forbidden);

|

||||

int8_t taosGranted(int8_t type);

|

||||

|

||||

#ifdef __cplusplus

|

||||

}

|

||||

|

|

|

|||

|

|

@ -581,8 +581,8 @@ typedef struct {

|

|||

};

|

||||

} SSubmitRsp;

|

||||

|

||||

int32_t tEncodeSSubmitRsp(SEncoder* pEncoder, const SSubmitRsp* pRsp);

|

||||

int32_t tDecodeSSubmitRsp(SDecoder* pDecoder, SSubmitRsp* pRsp);

|

||||

// int32_t tEncodeSSubmitRsp(SEncoder* pEncoder, const SSubmitRsp* pRsp);

|

||||

// int32_t tDecodeSSubmitRsp(SDecoder* pDecoder, SSubmitRsp* pRsp);

|

||||

// void tFreeSSubmitBlkRsp(void* param);

|

||||

void tFreeSSubmitRsp(SSubmitRsp* pRsp);

|

||||

|

||||

|

|

@ -885,8 +885,8 @@ typedef struct {

|

|||

int64_t maxStorage;

|

||||

} SCreateAcctReq, SAlterAcctReq;

|

||||

|

||||

int32_t tSerializeSCreateAcctReq(void* buf, int32_t bufLen, SCreateAcctReq* pReq);

|

||||

int32_t tDeserializeSCreateAcctReq(void* buf, int32_t bufLen, SCreateAcctReq* pReq);

|

||||

// int32_t tSerializeSCreateAcctReq(void* buf, int32_t bufLen, SCreateAcctReq* pReq);

|

||||

// int32_t tDeserializeSCreateAcctReq(void* buf, int32_t bufLen, SCreateAcctReq* pReq);

|

||||

|

||||

typedef struct {

|

||||

char user[TSDB_USER_LEN];

|

||||

|

|

@ -3446,7 +3446,7 @@ int32_t tDeserializeSCreateTagIdxReq(void* buf, int32_t bufLen, SCreateTagIndexR

|

|||

|

||||

typedef SMDropSmaReq SDropTagIndexReq;

|

||||

|

||||

int32_t tSerializeSDropTagIdxReq(void* buf, int32_t bufLen, SDropTagIndexReq* pReq);

|

||||

// int32_t tSerializeSDropTagIdxReq(void* buf, int32_t bufLen, SDropTagIndexReq* pReq);

|

||||

int32_t tDeserializeSDropTagIdxReq(void* buf, int32_t bufLen, SDropTagIndexReq* pReq);

|

||||

|

||||

typedef struct {

|

||||

|

|

@ -3567,8 +3567,8 @@ typedef struct {

|

|||

int8_t igNotExists;

|

||||

} SMDropFullTextReq;

|

||||

|

||||

int32_t tSerializeSMDropFullTextReq(void* buf, int32_t bufLen, SMDropFullTextReq* pReq);

|

||||

int32_t tDeserializeSMDropFullTextReq(void* buf, int32_t bufLen, SMDropFullTextReq* pReq);

|

||||

// int32_t tSerializeSMDropFullTextReq(void* buf, int32_t bufLen, SMDropFullTextReq* pReq);

|

||||

// int32_t tDeserializeSMDropFullTextReq(void* buf, int32_t bufLen, SMDropFullTextReq* pReq);

|

||||

|

||||

typedef struct {

|

||||

char indexFName[TSDB_INDEX_FNAME_LEN];

|

||||

|

|

@ -3820,6 +3820,7 @@ typedef struct {

|

|||

uint32_t phyLen;

|

||||

char* sql;

|

||||

char* msg;

|

||||

int8_t source;

|

||||

} SVDeleteReq;

|

||||

|

||||

int32_t tSerializeSVDeleteReq(void* buf, int32_t bufLen, SVDeleteReq* pReq);

|

||||

|

|

@ -3841,6 +3842,7 @@ typedef struct SDeleteRes {

|

|||

char tableFName[TSDB_TABLE_NAME_LEN];

|

||||

char tsColName[TSDB_COL_NAME_LEN];

|

||||

int64_t ctimeMs; // fill by vnode

|

||||

int8_t source;

|

||||

} SDeleteRes;

|

||||

|

||||

int32_t tEncodeDeleteRes(SEncoder* pCoder, const SDeleteRes* pRes);

|

||||

|

|

|

|||

|

|

@ -223,10 +223,10 @@ typedef struct SStoreTqReader {

|

|||

bool (*tqReaderCurrentBlockConsumed)();

|

||||

|

||||

struct SWalReader* (*tqReaderGetWalReader)(); // todo remove it

|

||||

int32_t (*tqReaderRetrieveTaosXBlock)(); // todo remove it

|

||||

// int32_t (*tqReaderRetrieveTaosXBlock)(); // todo remove it

|

||||

|

||||

int32_t (*tqReaderSetSubmitMsg)(); // todo remove it

|

||||

bool (*tqReaderNextBlockFilterOut)();

|

||||

// bool (*tqReaderNextBlockFilterOut)();

|

||||

} SStoreTqReader;

|

||||

|

||||

typedef struct SStoreSnapshotFn {

|

||||

|

|

|

|||

|

|

@ -78,6 +78,7 @@ typedef struct SSchedulerReq {

|

|||

void* chkKillParam;

|

||||

SExecResult* pExecRes;

|

||||

void** pFetchRes;

|

||||

int8_t source;

|

||||

} SSchedulerReq;

|

||||

|

||||

int32_t schedulerInit(void);

|

||||

|

|

|

|||

|

|

@ -56,7 +56,6 @@ extern "C" {

|

|||

#define STREAM_EXEC_T_RESTART_ALL_TASKS (-4)

|

||||

#define STREAM_EXEC_T_STOP_ALL_TASKS (-5)

|

||||

#define STREAM_EXEC_T_RESUME_TASK (-6)

|

||||

#define STREAM_EXEC_T_UPDATE_TASK_EPSET (-7)

|

||||

|

||||

typedef struct SStreamTask SStreamTask;

|

||||

typedef struct SStreamQueue SStreamQueue;

|

||||

|

|

@ -783,11 +782,14 @@ bool streamTaskIsAllUpstreamClosed(SStreamTask* pTask);

|

|||

bool streamTaskSetSchedStatusWait(SStreamTask* pTask);

|

||||

int8_t streamTaskSetSchedStatusActive(SStreamTask* pTask);

|

||||

int8_t streamTaskSetSchedStatusInactive(SStreamTask* pTask);

|

||||

int32_t streamTaskClearHTaskAttr(SStreamTask* pTask, int32_t clearRelHalt, bool metaLock);

|

||||

int32_t streamTaskClearHTaskAttr(SStreamTask* pTask, int32_t clearRelHalt);

|

||||

|

||||

int32_t streamTaskHandleEvent(SStreamTaskSM* pSM, EStreamTaskEvent event);

|

||||

int32_t streamTaskOnHandleEventSuccess(SStreamTaskSM* pSM, EStreamTaskEvent event);

|

||||

void streamTaskRestoreStatus(SStreamTask* pTask);

|

||||

|

||||

typedef int32_t (*__state_trans_user_fn)(SStreamTask*, void* param);

|

||||

int32_t streamTaskHandleEventAsync(SStreamTaskSM* pSM, EStreamTaskEvent event, __state_trans_user_fn callbackFn, void* param);

|

||||

int32_t streamTaskOnHandleEventSuccess(SStreamTaskSM* pSM, EStreamTaskEvent event, __state_trans_user_fn callbackFn, void* param);

|

||||

int32_t streamTaskRestoreStatus(SStreamTask* pTask);

|

||||

|

||||

int32_t streamSendCheckRsp(const SStreamMeta* pMeta, const SStreamTaskCheckReq* pReq, SStreamTaskCheckRsp* pRsp,

|

||||

SRpcHandleInfo* pRpcInfo, int32_t taskId);

|

||||

|

|

|

|||

|

|

@ -433,7 +433,7 @@ int32_t* taosGetErrno();

|

|||

|

||||

//mnode-compact

|

||||

#define TSDB_CODE_MND_INVALID_COMPACT_ID TAOS_DEF_ERROR_CODE(0, 0x04B1)

|

||||

|

||||

#define TSDB_CODE_MND_COMPACT_DETAIL_NOT_EXIST TAOS_DEF_ERROR_CODE(0, 0x04B2)

|

||||

|

||||

// vnode

|

||||

// #define TSDB_CODE_VND_ACTION_IN_PROGRESS TAOS_DEF_ERROR_CODE(0, 0x0500) // 2.x

|

||||

|

|

|

|||

|

|

@ -187,6 +187,8 @@ typedef enum ELogicConditionType {

|

|||

LOGIC_COND_TYPE_NOT,

|

||||

} ELogicConditionType;

|

||||

|

||||

#define TSDB_INT32_ID_LEN 11

|

||||

|

||||

#define TSDB_NAME_DELIMITER_LEN 1

|

||||

|

||||

#define TSDB_UNI_LEN 24

|

||||

|

|

|

|||

|

|

@ -72,40 +72,6 @@ struct STaosQnode {

|

|||

char item[];

|

||||

};

|

||||

|

||||

struct STaosQueue {

|

||||

STaosQnode *head;

|

||||

STaosQnode *tail;

|

||||

STaosQueue *next; // for queue set

|

||||

STaosQset *qset; // for queue set

|

||||

void *ahandle; // for queue set

|

||||

FItem itemFp;

|

||||

FItems itemsFp;

|

||||

TdThreadMutex mutex;

|

||||

int64_t memOfItems;

|

||||

int32_t numOfItems;

|

||||

int64_t threadId;

|

||||

int64_t memLimit;

|

||||

int64_t itemLimit;

|

||||

};

|

||||

|

||||

struct STaosQset {

|

||||

STaosQueue *head;

|

||||

STaosQueue *current;

|

||||

TdThreadMutex mutex;

|

||||

tsem_t sem;

|

||||

int32_t numOfQueues;

|

||||

int32_t numOfItems;

|

||||

};

|

||||

|

||||

struct STaosQall {

|

||||

STaosQnode *current;

|

||||

STaosQnode *start;

|

||||

int32_t numOfItems;

|

||||

int64_t memOfItems;

|

||||

int32_t unAccessedNumOfItems;

|

||||

int64_t unAccessMemOfItems;

|

||||

};

|

||||

|

||||

STaosQueue *taosOpenQueue();

|

||||

void taosCloseQueue(STaosQueue *queue);

|

||||

void taosSetQueueFp(STaosQueue *queue, FItem itemFp, FItems itemsFp);

|

||||

|

|

@ -140,6 +106,8 @@ int32_t taosGetQueueNumber(STaosQset *qset);

|

|||

int32_t taosReadQitemFromQset(STaosQset *qset, void **ppItem, SQueueInfo *qinfo);

|

||||

int32_t taosReadAllQitemsFromQset(STaosQset *qset, STaosQall *qall, SQueueInfo *qinfo);

|

||||

void taosResetQsetThread(STaosQset *qset, void *pItem);

|

||||

void taosQueueSetThreadId(STaosQueue *pQueue, int64_t threadId);

|

||||

int64_t taosQueueGetThreadId(STaosQueue *pQueue);

|

||||

|

||||

#ifdef __cplusplus

|

||||

}

|

||||

|

|

|

|||

|

|

@ -26,6 +26,8 @@ typedef struct SScalableBf {

|

|||

SArray *bfArray; // array of bloom filters

|

||||

uint32_t growth;

|

||||

uint64_t numBits;

|

||||

uint32_t maxBloomFilters;

|

||||

int8_t status;

|

||||

_hash_fn_t hashFn1;

|

||||

_hash_fn_t hashFn2;

|

||||

} SScalableBf;

|

||||

|

|

|

|||

|

|

@ -284,6 +284,7 @@ typedef struct SRequestObj {

|

|||

void* pWrapper;

|

||||

SMetaData parseMeta;

|

||||

char* effectiveUser;

|

||||

int8_t source;

|

||||

} SRequestObj;

|

||||

|

||||

typedef struct SSyncQueryParam {

|

||||

|

|

@ -306,10 +307,10 @@ void doFreeReqResultInfo(SReqResultInfo* pResInfo);

|

|||

int32_t transferTableNameList(const char* tbList, int32_t acctId, char* dbName, SArray** pReq);

|

||||

void syncCatalogFn(SMetaData* pResult, void* param, int32_t code);

|

||||

|

||||

TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly);

|

||||

TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly, int8_t source);

|

||||

TAOS_RES* taosQueryImplWithReqid(TAOS* taos, const char* sql, bool validateOnly, int64_t reqid);

|

||||

|

||||

void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly);

|

||||

void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly, int8_t source);

|

||||

void taosAsyncQueryImplWithReqid(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly,

|

||||

int64_t reqid);

|

||||

void taosAsyncFetchImpl(SRequestObj *pRequest, __taos_async_fn_t fp, void *param);

|

||||

|

|

@ -354,6 +355,7 @@ SRequestObj* acquireRequest(int64_t rid);

|

|||

int32_t releaseRequest(int64_t rid);

|

||||

int32_t removeRequest(int64_t rid);

|

||||

void doDestroyRequest(void* p);

|

||||

int64_t removeFromMostPrevReq(SRequestObj* pRequest);

|

||||

|

||||

char* getDbOfConnection(STscObj* pObj);

|

||||

void setConnectionDB(STscObj* pTscObj, const char* db);

|

||||

|

|

|

|||

|

|

@ -80,7 +80,7 @@ extern "C" {

|

|||

#define IS_SAME_KEY (maxKV->type == kv->type && maxKV->keyLen == kv->keyLen && memcmp(maxKV->key, kv->key, kv->keyLen) == 0)

|

||||

|

||||

#define IS_SLASH_LETTER_IN_MEASUREMENT(sql) \

|

||||

(*((sql)-1) == SLASH && (*(sql) == COMMA || *(sql) == SPACE))

|

||||

(*((sql)-1) == SLASH && (*(sql) == COMMA || *(sql) == SPACE || *(sql) == SLASH))

|

||||

|

||||

#define MOVE_FORWARD_ONE(sql, len) (memmove((void *)((sql)-1), (sql), len))

|

||||

|

||||

|

|

|

|||

|

|

@ -385,6 +385,33 @@ int32_t releaseRequest(int64_t rid) { return taosReleaseRef(clientReqRefPool, ri

|

|||

|

||||

int32_t removeRequest(int64_t rid) { return taosRemoveRef(clientReqRefPool, rid); }

|

||||

|

||||

/// return the most previous req ref id

|

||||

int64_t removeFromMostPrevReq(SRequestObj* pRequest) {

|

||||

int64_t mostPrevReqRefId = pRequest->self;

|

||||

SRequestObj* pTmp = pRequest;

|

||||

while (pTmp->relation.prevRefId) {

|

||||

pTmp = acquireRequest(pTmp->relation.prevRefId);

|

||||

if (pTmp) {

|

||||

mostPrevReqRefId = pTmp->self;

|

||||

releaseRequest(mostPrevReqRefId);

|

||||

} else {

|

||||

break;

|

||||

}

|

||||

}

|

||||

removeRequest(mostPrevReqRefId);

|

||||

return mostPrevReqRefId;

|

||||

}

|

||||

|

||||

void destroyNextReq(int64_t nextRefId) {

|

||||

if (nextRefId) {

|

||||

SRequestObj* pObj = acquireRequest(nextRefId);

|

||||

if (pObj) {

|

||||

releaseRequest(nextRefId);

|

||||

releaseRequest(nextRefId);

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

void destroySubRequests(SRequestObj *pRequest) {

|

||||

int32_t reqIdx = -1;

|

||||

SRequestObj *pReqList[16] = {NULL};

|

||||

|

|

@ -435,7 +462,7 @@ void doDestroyRequest(void *p) {

|

|||

uint64_t reqId = pRequest->requestId;

|

||||

tscTrace("begin to destroy request %" PRIx64 " p:%p", reqId, pRequest);

|

||||

|

||||

destroySubRequests(pRequest);

|

||||

int64_t nextReqRefId = pRequest->relation.nextRefId;

|

||||

|

||||

taosHashRemove(pRequest->pTscObj->pRequests, &pRequest->self, sizeof(pRequest->self));

|

||||

|

||||

|

|

@ -471,6 +498,7 @@ void doDestroyRequest(void *p) {

|

|||

taosMemoryFreeClear(pRequest->sqlstr);

|

||||

taosMemoryFree(pRequest);

|

||||

tscTrace("end to destroy request %" PRIx64 " p:%p", reqId, pRequest);

|

||||

destroyNextReq(nextReqRefId);

|

||||

}

|

||||

|

||||

void destroyRequest(SRequestObj *pRequest) {

|

||||

|

|

@ -479,7 +507,7 @@ void destroyRequest(SRequestObj *pRequest) {

|

|||

}

|

||||

|

||||

taos_stop_query(pRequest);

|

||||

removeRequest(pRequest->self);

|

||||

removeFromMostPrevReq(pRequest);

|

||||

}

|

||||

|

||||

void taosStopQueryImpl(SRequestObj *pRequest) {

|

||||

|

|

|

|||

|

|

@ -743,6 +743,7 @@ int32_t scheduleQuery(SRequestObj* pRequest, SQueryPlan* pDag, SArray* pNodeList

|

|||

.chkKillFp = chkRequestKilled,

|

||||

.chkKillParam = (void*)pRequest->self,

|

||||

.pExecRes = &res,

|

||||

.source = pRequest->source,

|

||||

};

|

||||

|

||||

int32_t code = schedulerExecJob(&req, &pRequest->body.queryJob);

|

||||

|

|

@ -1212,6 +1213,7 @@ static int32_t asyncExecSchQuery(SRequestObj* pRequest, SQuery* pQuery, SMetaDat

|

|||

.chkKillFp = chkRequestKilled,

|

||||

.chkKillParam = (void*)pRequest->self,

|

||||

.pExecRes = NULL,

|

||||

.source = pRequest->source,

|

||||

};

|

||||

code = schedulerExecJob(&req, &pRequest->body.queryJob);

|

||||

taosArrayDestroy(pNodeList);

|

||||

|

|

@ -2475,7 +2477,7 @@ void syncQueryFn(void* param, void* res, int32_t code) {

|

|||

tsem_post(&pParam->sem);

|

||||

}

|

||||

|

||||

void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly) {

|

||||

void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly, int8_t source) {

|

||||

if (sql == NULL || NULL == fp) {

|

||||

terrno = TSDB_CODE_INVALID_PARA;

|

||||

if (fp) {

|

||||

|

|

@ -2501,6 +2503,7 @@ void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp,

|

|||

return;

|

||||

}

|

||||

|

||||

pRequest->source = source;

|

||||

pRequest->body.queryFp = fp;

|

||||

doAsyncQuery(pRequest, false);

|

||||

}

|

||||

|

|

@ -2535,7 +2538,7 @@ void taosAsyncQueryImplWithReqid(uint64_t connId, const char* sql, __taos_async_

|

|||

doAsyncQuery(pRequest, false);

|

||||

}

|

||||

|

||||

TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly) {

|

||||

TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly, int8_t source) {

|

||||

if (NULL == taos) {

|

||||

terrno = TSDB_CODE_TSC_DISCONNECTED;

|

||||

return NULL;

|

||||

|

|

@ -2550,7 +2553,7 @@ TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly) {

|

|||

}

|

||||

tsem_init(&param->sem, 0, 0);

|

||||

|

||||

taosAsyncQueryImpl(*(int64_t*)taos, sql, syncQueryFn, param, validateOnly);

|

||||

taosAsyncQueryImpl(*(int64_t*)taos, sql, syncQueryFn, param, validateOnly, source);

|

||||

tsem_wait(&param->sem);

|

||||

|

||||

SRequestObj* pRequest = NULL;

|

||||

|

|

|

|||

|

|

@ -402,7 +402,7 @@ TAOS_FIELD *taos_fetch_fields(TAOS_RES *res) {

|

|||

return pResInfo->userFields;

|

||||

}

|

||||

|

||||

TAOS_RES *taos_query(TAOS *taos, const char *sql) { return taosQueryImpl(taos, sql, false); }

|

||||

TAOS_RES *taos_query(TAOS *taos, const char *sql) { return taosQueryImpl(taos, sql, false, TD_REQ_FROM_APP); }

|

||||

TAOS_RES *taos_query_with_reqid(TAOS *taos, const char *sql, int64_t reqid) {

|

||||

return taosQueryImplWithReqid(taos, sql, false, reqid);

|

||||

}

|

||||

|

|

@ -828,7 +828,7 @@ int *taos_get_column_data_offset(TAOS_RES *res, int columnIndex) {

|

|||

}

|

||||

|

||||

int taos_validate_sql(TAOS *taos, const char *sql) {

|

||||

TAOS_RES *pObj = taosQueryImpl(taos, sql, true);

|

||||

TAOS_RES *pObj = taosQueryImpl(taos, sql, true, TD_REQ_FROM_APP);

|

||||

|

||||

int code = taos_errno(pObj);

|

||||

|

||||

|

|

@ -1126,7 +1126,7 @@ void continueInsertFromCsv(SSqlCallbackWrapper *pWrapper, SRequestObj *pRequest)

|

|||

void taos_query_a(TAOS *taos, const char *sql, __taos_async_fn_t fp, void *param) {

|

||||

int64_t connId = *(int64_t *)taos;

|

||||

tscDebug("taos_query_a start with sql:%s", sql);

|

||||

taosAsyncQueryImpl(connId, sql, fp, param, false);

|

||||

taosAsyncQueryImpl(connId, sql, fp, param, false, TD_REQ_FROM_APP);

|

||||

tscDebug("taos_query_a end with sql:%s", sql);

|

||||

}

|

||||

|

||||

|

|

@ -1254,54 +1254,34 @@ void doAsyncQuery(SRequestObj *pRequest, bool updateMetaForce) {

|

|||

}

|

||||

|

||||

void restartAsyncQuery(SRequestObj *pRequest, int32_t code) {

|

||||

int32_t reqIdx = 0;

|

||||

SRequestObj *pReqList[16] = {NULL};

|

||||

SRequestObj *pUserReq = NULL;

|

||||

pReqList[0] = pRequest;

|

||||

uint64_t tmpRefId = 0;

|

||||

SRequestObj *pTmp = pRequest;

|

||||

while (pTmp->relation.prevRefId) {

|

||||

tmpRefId = pTmp->relation.prevRefId;

|

||||

pTmp = acquireRequest(tmpRefId);

|

||||

if (pTmp) {

|

||||

pReqList[++reqIdx] = pTmp;

|

||||

releaseRequest(tmpRefId);

|

||||

} else {

|

||||

tscError("prev req ref 0x%" PRIx64 " is not there", tmpRefId);

|

||||

tscInfo("restart request: %s p: %p", pRequest->sqlstr, pRequest);

|

||||

SRequestObj* pUserReq = pRequest;

|

||||

acquireRequest(pRequest->self);

|

||||

while (pUserReq) {

|

||||

if (pUserReq->self == pUserReq->relation.userRefId || pUserReq->relation.userRefId == 0) {

|

||||

break;

|

||||

}

|

||||

}

|

||||

|

||||

tmpRefId = pRequest->relation.nextRefId;

|

||||

while (tmpRefId) {

|

||||

pTmp = acquireRequest(tmpRefId);

|

||||

if (pTmp) {

|

||||

tmpRefId = pTmp->relation.nextRefId;

|

||||

removeRequest(pTmp->self);

|

||||

releaseRequest(pTmp->self);

|

||||

} else {

|

||||

tscError("next req ref 0x%" PRIx64 " is not there", tmpRefId);

|

||||

break;

|

||||

int64_t nextRefId = pUserReq->relation.nextRefId;

|

||||

releaseRequest(pUserReq->self);

|

||||

if (nextRefId) {

|

||||

pUserReq = acquireRequest(nextRefId);

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

for (int32_t i = reqIdx; i >= 0; i--) {

|

||||

destroyCtxInRequest(pReqList[i]);

|

||||

if (pReqList[i]->relation.userRefId == pReqList[i]->self || 0 == pReqList[i]->relation.userRefId) {

|

||||

pUserReq = pReqList[i];

|

||||

} else {

|

||||

removeRequest(pReqList[i]->self);

|

||||

}

|

||||

}

|

||||

|

||||

bool hasSubRequest = pUserReq != pRequest || pRequest->relation.prevRefId != 0;

|

||||

if (pUserReq) {

|

||||

destroyCtxInRequest(pUserReq);

|

||||

pUserReq->prevCode = code;

|

||||

memset(&pUserReq->relation, 0, sizeof(pUserReq->relation));

|

||||

} else {

|

||||

tscError("user req is missing");

|

||||

tscError("User req is missing");

|

||||

removeFromMostPrevReq(pRequest);

|

||||

return;

|

||||

}

|

||||

|

||||

if (hasSubRequest)

|

||||

removeFromMostPrevReq(pRequest);

|

||||

else

|

||||

releaseRequest(pUserReq->self);

|

||||

doAsyncQuery(pUserReq, true);

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@@ -1256,7 +1256,7 @@ static int32_t taosDeleteData(TAOS* taos, void* meta, int32_t metaLen) {
   snprintf(sql, sizeof(sql), "delete from `%s` where `%s` >= %" PRId64 " and `%s` <= %" PRId64, req.tableFName,
            req.tsColName, req.skey, req.tsColName, req.ekey);
 
-  TAOS_RES* res = taos_query(taos, sql);
+  TAOS_RES* res = taosQueryImpl(taos, sql, false, TD_REQ_FROM_TAOX);
   SRequestObj* pRequest = (SRequestObj*)res;
   code = pRequest->code;
   if (code == TSDB_CODE_PAR_TABLE_NOT_EXIST || code == TSDB_CODE_PAR_GET_META_ERROR) {

|

|

|

|||

|

|

@@ -20,14 +20,14 @@
 
 #include "clientSml.h"
 
-#define IS_COMMA(sql) (*(sql) == COMMA && *((sql)-1) != SLASH)
-#define IS_SPACE(sql) (*(sql) == SPACE && *((sql)-1) != SLASH)
-#define IS_EQUAL(sql) (*(sql) == EQUAL && *((sql)-1) != SLASH)
+#define IS_COMMA(sql,escapeChar) (*(sql) == COMMA && (*((sql)-1) != SLASH || ((sql)-1 == escapeChar)))
+#define IS_SPACE(sql,escapeChar) (*(sql) == SPACE && (*((sql)-1) != SLASH || ((sql)-1 == escapeChar)))
+#define IS_EQUAL(sql,escapeChar) (*(sql) == EQUAL && (*((sql)-1) != SLASH || ((sql)-1 == escapeChar)))
 
 #define IS_SLASH_LETTER_IN_FIELD_VALUE(sql) (*((sql)-1) == SLASH && (*(sql) == QUOTE || *(sql) == SLASH))
 
 #define IS_SLASH_LETTER_IN_TAG_FIELD_KEY(sql) \
-  (*((sql)-1) == SLASH && (*(sql) == COMMA || *(sql) == SPACE || *(sql) == EQUAL))
+  (*((sql)-1) == SLASH && (*(sql) == COMMA || *(sql) == SPACE || *(sql) == EQUAL || *(sql) == SLASH))
 
 #define PROCESS_SLASH_IN_FIELD_VALUE(key, keyLen) \
   for (int i = 1; i < keyLen; ++i) { \

|

||||
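Note on the macro change above: the new `escapeChar` argument lets the delimiter checks tell a live backslash from one that was itself escaped (the second half of a `\\` pair). The sketch below illustrates that idea in isolation. It is a simplified stand-in, not the parser's code: `first_delimiter_comma` and its `escaped` pointer are hypothetical names, and the set of escapable characters is assumed from `IS_SLASH_LETTER_IN_TAG_FIELD_KEY`.

```c
#include <stddef.h>

/*
 * Illustrative only: return the index of the first comma that acts as a
 * delimiter, or -1 if there is none. `escaped` plays the role of
 * `escapeChar`: it points at the most recent character that was consumed
 * as an escaped literal.
 */
static int first_delimiter_comma(const char *s) {
  const char *escaped = NULL;
  for (const char *p = s; *p != '\0'; ++p) {
    /* A preceding backslash escapes *p only if that backslash was not
     * already used up as the escaped half of an earlier pair. */
    int afterLiveSlash = (p > s) && (p[-1] == '\\') && (p - 1 != escaped);
    if (afterLiveSlash && (*p == ',' || *p == ' ' || *p == '=' || *p == '\\')) {
      escaped = p; /* literal character, never a delimiter */
      continue;
    }
    if (*p == ',') return (int)(p - s);
  }
  return -1;
}
```

With this rule the input `a\\,b` (two backslashes, then a comma) splits at the comma, whereas a check that only looks at the previous byte would treat the comma as escaped.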

|

|

@ -198,7 +198,7 @@ static int32_t smlProcessTagLine(SSmlHandle *info, char **sql, char *sqlEnd){

|

|||

int cnt = 0;

|

||||

|

||||

while (*sql < sqlEnd) {

|

||||

if (unlikely(IS_SPACE(*sql))) {

|

||||

if (unlikely(IS_SPACE(*sql,NULL))) {

|

||||

break;

|

||||

}

|

||||

|

||||

|

|

@ -207,18 +207,21 @@ static int32_t smlProcessTagLine(SSmlHandle *info, char **sql, char *sqlEnd){

|

|||

size_t keyLen = 0;

|

||||

bool keyEscaped = false;

|

||||

size_t keyLenEscaped = 0;

|

||||

const char *escapeChar = NULL;

|

||||

|

||||

while (*sql < sqlEnd) {

|

||||

if (unlikely(IS_SPACE(*sql) || IS_COMMA(*sql))) {

|

||||

if (unlikely(IS_SPACE(*sql,escapeChar) || IS_COMMA(*sql,escapeChar))) {

|

||||

smlBuildInvalidDataMsg(&info->msgBuf, "invalid data", *sql);

|

||||

terrno = TSDB_CODE_SML_INVALID_DATA;

|

||||

return -1;

|

||||

}

|

||||

if (unlikely(IS_EQUAL(*sql))) {

|

||||

if (unlikely(IS_EQUAL(*sql,escapeChar))) {

|

||||

keyLen = *sql - key;

|

||||

(*sql)++;

|

||||

break;

|

||||

}

|

||||

if (IS_SLASH_LETTER_IN_TAG_FIELD_KEY(*sql)) {

|

||||

escapeChar = *sql;

|

||||

keyLenEscaped++;

|

||||

keyEscaped = true;

|

||||

}

|

||||

|

|

@ -238,15 +241,16 @@ static int32_t smlProcessTagLine(SSmlHandle *info, char **sql, char *sqlEnd){

|

|||

size_t valueLenEscaped = 0;

|

||||

while (*sql < sqlEnd) {

|

||||

// parse value

|

||||

if (unlikely(IS_SPACE(*sql) || IS_COMMA(*sql))) {

|

||||

if (unlikely(IS_SPACE(*sql,escapeChar) || IS_COMMA(*sql,escapeChar))) {

|

||||

break;

|

||||

} else if (unlikely(IS_EQUAL(*sql))) {

|

||||

} else if (unlikely(IS_EQUAL(*sql,escapeChar))) {

|

||||

smlBuildInvalidDataMsg(&info->msgBuf, "invalid data", *sql);

|

||||

terrno = TSDB_CODE_SML_INVALID_DATA;

|

||||

return -1;

|

||||

}

|

||||

|

||||

if (IS_SLASH_LETTER_IN_TAG_FIELD_KEY(*sql)) {

|

||||

escapeChar = *sql;

|

||||

valueLenEscaped++;

|

||||

valueEscaped = true;

|

||||

}

|

||||

|

|

@ -293,7 +297,7 @@ static int32_t smlProcessTagLine(SSmlHandle *info, char **sql, char *sqlEnd){

|

|||

}

|

||||

|

||||

cnt++;

|

||||

if (IS_SPACE(*sql)) {

|

||||

if (IS_SPACE(*sql,escapeChar)) {

|

||||

break;

|

||||

}

|

||||

(*sql)++;

|

||||

|

|

@ -326,7 +330,7 @@ static int32_t smlParseTagLine(SSmlHandle *info, char **sql, char *sqlEnd, SSmlL

|

|||

static int32_t smlParseColLine(SSmlHandle *info, char **sql, char *sqlEnd, SSmlLineInfo *currElement) {

|

||||

int cnt = 0;

|

||||

while (*sql < sqlEnd) {

|

||||

if (unlikely(IS_SPACE(*sql))) {

|

||||

if (unlikely(IS_SPACE(*sql,NULL))) {

|

||||

break;

|

||||

}

|

||||

|

||||

|

|

@ -335,17 +339,19 @@ static int32_t smlParseColLine(SSmlHandle *info, char **sql, char *sqlEnd, SSmlL

|

|||

size_t keyLen = 0;

|

||||

bool keyEscaped = false;

|

||||

size_t keyLenEscaped = 0;

|

||||

const char *escapeChar = NULL;

|

||||

while (*sql < sqlEnd) {

|

||||

if (unlikely(IS_SPACE(*sql) || IS_COMMA(*sql))) {

|

||||

if (unlikely(IS_SPACE(*sql,escapeChar) || IS_COMMA(*sql,escapeChar))) {

|

||||

smlBuildInvalidDataMsg(&info->msgBuf, "invalid data", *sql);

|

||||

return TSDB_CODE_SML_INVALID_DATA;

|

||||

}

|

||||

if (unlikely(IS_EQUAL(*sql))) {

|

||||

if (unlikely(IS_EQUAL(*sql,escapeChar))) {

|

||||

keyLen = *sql - key;

|

||||

(*sql)++;

|

||||

break;

|

||||

}

|

||||

if (IS_SLASH_LETTER_IN_TAG_FIELD_KEY(*sql)) {

|

||||

escapeChar = *sql;

|

||||

keyLenEscaped++;

|

||||

keyEscaped = true;

|

||||

}

|

||||

|

|

@ -363,7 +369,6 @@ static int32_t smlParseColLine(SSmlHandle *info, char **sql, char *sqlEnd, SSmlL

|

|||

bool valueEscaped = false;

|

||||

size_t valueLenEscaped = 0;

|

||||

int quoteNum = 0;

|

||||

const char *escapeChar = NULL;

|

||||

while (*sql < sqlEnd) {

|

||||

// parse value

|

||||

if (unlikely(*(*sql) == QUOTE && (*(*sql - 1) != SLASH || (*sql - 1) == escapeChar))) {

|

||||

|

|

@ -374,7 +379,7 @@ static int32_t smlParseColLine(SSmlHandle *info, char **sql, char *sqlEnd, SSmlL

|

|||

}

|

||||

continue;

|

||||

}

|

||||

if (quoteNum % 2 == 0 && (unlikely(IS_SPACE(*sql) || IS_COMMA(*sql)))) {

|

||||

if (quoteNum % 2 == 0 && (unlikely(IS_SPACE(*sql,escapeChar) || IS_COMMA(*sql,escapeChar)))) {

|

||||

break;

|

||||

}

|

||||

if (IS_SLASH_LETTER_IN_FIELD_VALUE(*sql) && (*sql - 1) != escapeChar) {

|

||||

|

|

@ -437,7 +442,7 @@ static int32_t smlParseColLine(SSmlHandle *info, char **sql, char *sqlEnd, SSmlL

|

|||

}

|

||||

|

||||

cnt++;

|

||||

if (IS_SPACE(*sql)) {

|

||||

if (IS_SPACE(*sql,escapeChar)) {

|

||||

break;

|

||||

}

|

||||

(*sql)++;

|

||||

|

|

@ -453,19 +458,18 @@ int32_t smlParseInfluxString(SSmlHandle *info, char *sql, char *sqlEnd, SSmlLine

|

|||

elements->measure = sql;

|

||||

// parse measure

|

||||

size_t measureLenEscaped = 0;

|

||||

const char *escapeChar = NULL;

|

||||

while (sql < sqlEnd) {

|

||||

if (unlikely((sql != elements->measure) && IS_SLASH_LETTER_IN_MEASUREMENT(sql))) {

|

||||

elements->measureEscaped = true;

|

||||

measureLenEscaped++;

|

||||

sql++;

|

||||

continue;

|

||||

}

|

||||

if (unlikely(IS_COMMA(sql))) {

|

||||

if (unlikely(IS_COMMA(sql,escapeChar) || IS_SPACE(sql,escapeChar))) {

|

||||

break;

|

||||

}

|

||||

|

||||

if (unlikely(IS_SPACE(sql))) {

|

||||

break;

|

||||

if (unlikely((sql != elements->measure) && IS_SLASH_LETTER_IN_MEASUREMENT(sql))) {

|

||||

elements->measureEscaped = true;

|

||||

escapeChar = sql;

|

||||

measureLenEscaped++;

|

||||

sql++;

|

||||

continue;

|

||||

}

|

||||

sql++;

|

||||

}

|

||||

|

|

@ -478,9 +482,12 @@ int32_t smlParseInfluxString(SSmlHandle *info, char *sql, char *sqlEnd, SSmlLine

|

|||

// to get measureTagsLen before

|

||||

const char *tmp = sql;

|

||||

while (tmp < sqlEnd) {

|

||||

if (unlikely(IS_SPACE(tmp))) {

|

||||

if (unlikely(IS_SPACE(tmp,escapeChar))) {

|

||||

break;

|

||||

}

|

||||

if(unlikely(IS_SLASH_LETTER_IN_TAG_FIELD_KEY(tmp))){

|

||||

escapeChar = tmp;

|

||||

}

|

||||

tmp++;

|

||||

}

|

||||

elements->measureTagsLen = tmp - elements->measure;

|

||||

|

|

|

|||

|

|

@@ -876,12 +876,13 @@ int32_t tmqHandleAllDelayedTask(tmq_t* pTmq) {
   STaosQall* qall = taosAllocateQall();
   taosReadAllQitems(pTmq->delayedTask, qall);
 
-  if (qall->numOfItems == 0) {
+  int32_t numOfItems = taosQallItemSize(qall);
+  if (numOfItems == 0) {
     taosFreeQall(qall);
     return TSDB_CODE_SUCCESS;
   }
 
-  tscDebug("consumer:0x%" PRIx64 " handle delayed %d tasks before poll data", pTmq->consumerId, qall->numOfItems);
+  tscDebug("consumer:0x%" PRIx64 " handle delayed %d tasks before poll data", pTmq->consumerId, numOfItems);
   int8_t* pTaskType = NULL;
   taosGetQitem(qall, (void**)&pTaskType);
 

|

|

@ -1009,19 +1010,8 @@ int32_t tmq_unsubscribe(tmq_t* tmq) {

|

|||

}

|

||||

taosSsleep(2); // sleep 2s for hb to send offset and rows to server

|

||||

|

||||

int32_t rsp;

|

||||

int32_t retryCnt = 0;

|

||||

tmq_list_t* lst = tmq_list_new();

|

||||

while (1) {

|

||||

rsp = tmq_subscribe(tmq, lst);

|

||||

if (rsp != TSDB_CODE_MND_CONSUMER_NOT_READY || retryCnt > 5) {

|

||||

break;

|

||||

} else {

|

||||

retryCnt++;

|

||||

taosMsleep(500);

|

||||

}

|

||||

}

|

||||

|

||||

int32_t rsp = tmq_subscribe(tmq, lst);

|

||||

tmq_list_destroy(lst);

|

||||

return rsp;

|

||||

}

|

||||

|

|

@ -1271,10 +1261,9 @@ int32_t tmq_subscribe(tmq_t* tmq, const tmq_list_t* topic_list) {

|

|||

}

|

||||

|

||||

int32_t retryCnt = 0;

|

||||

while (syncAskEp(tmq) != 0) {

|

||||

if (retryCnt++ > MAX_RETRY_COUNT) {

|

||||

while ((code = syncAskEp(tmq)) != 0) {

|

||||

if (retryCnt++ > MAX_RETRY_COUNT || code == TSDB_CODE_MND_CONSUMER_NOT_EXIST) {

|

||||

tscError("consumer:0x%" PRIx64 ", mnd not ready for subscribe, retry more than 2 minutes", tmq->consumerId);

|

||||

code = TSDB_CODE_MND_CONSUMER_NOT_READY;

|

||||

goto FAIL;

|

||||

}

|

||||

|

||||
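Note on the `tmq_subscribe` hunk above: the loop now captures the return code of `syncAskEp` and stops retrying as soon as the code is `TSDB_CODE_MND_CONSUMER_NOT_EXIST`, instead of waiting for the retry budget to run out. A minimal sketch of that pattern follows. The status codes, `AskFn`, `Script`, `scripted_ask`, and `ask_with_retry` are hypothetical stand-ins for illustration, and the delay between attempts is left out.

```c
#include <stdint.h>

/* Hypothetical status codes standing in for the TSDB_CODE_MND_* values. */
enum { CODE_OK = 0, CODE_NOT_READY = 1, CODE_NOT_EXIST = 2 };

typedef int32_t (*AskFn)(void *ctx);

/* Test double: replays a fixed sequence of return codes. */
typedef struct {
  const int32_t *codes;
  int32_t        pos;
} Script;

static int32_t scripted_ask(void *ctx) {
  Script *s = (Script *)ctx;
  return s->codes[s->pos++];
}

/*
 * Retry `ask` until it succeeds. Give up when the retry budget is exceeded
 * or when the failure is one that retrying cannot fix (CODE_NOT_EXIST).
 * `attempts` reports how many calls were made.
 */
static int32_t ask_with_retry(AskFn ask, void *ctx, int32_t maxRetry, int32_t *attempts) {
  int32_t retryCnt = 0;
  *attempts = 0;
  for (;;) {
    int32_t code = ask(ctx);
    (*attempts)++;
    if (code == CODE_OK) return CODE_OK;
    if (retryCnt++ > maxRetry || code == CODE_NOT_EXIST) {
      return CODE_NOT_READY; /* same code is reported for both exits */
    }
  }
}
```

As in the hunk, both exit paths report the same "not ready" code to the caller; only the number of attempts differs.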

|

|

@@ -1839,7 +1828,7 @@ static void updateVgInfo(SMqClientVg* pVg, STqOffsetVal* reqOffset, STqOffsetVal
 }
 
 static void* tmqHandleAllRsp(tmq_t* tmq, int64_t timeout) {
-  tscDebug("consumer:0x%" PRIx64 " start to handle the rsp, total:%d", tmq->consumerId, tmq->qall->numOfItems);
+  tscDebug("consumer:0x%" PRIx64 " start to handle the rsp, total:%d", tmq->consumerId, taosQallItemSize(tmq->qall));
 
   while (1) {
     SMqRspWrapper* pRspWrapper = NULL;

|

|

@ -2147,26 +2136,19 @@ int32_t tmq_consumer_close(tmq_t* tmq) {

|

|||

if (tmq->status == TMQ_CONSUMER_STATUS__READY) {

|

||||

// if auto commit is set, commit before close consumer. Otherwise, do nothing.

|

||||

if (tmq->autoCommit) {

|

||||

int32_t rsp = tmq_commit_sync(tmq, NULL);

|

||||

if (rsp != 0) {

|

||||

return rsp;

|

||||

int32_t code = tmq_commit_sync(tmq, NULL);

|

||||

if (code != 0) {

|

||||

return code;

|

||||

}

|

||||

}

|

||||

taosSsleep(2); // sleep 2s for hb to send offset and rows to server

|

||||

|

||||

int32_t retryCnt = 0;

|

||||

tmq_list_t* lst = tmq_list_new();

|

||||

while (1) {

|

||||

int32_t rsp = tmq_subscribe(tmq, lst);

|

||||

if (rsp != TSDB_CODE_MND_CONSUMER_NOT_READY || retryCnt > 5) {

|

||||

break;

|

||||

} else {

|

||||

retryCnt++;

|

||||

taosMsleep(500);

|

||||

}

|

||||

}

|

||||

|

||||

int32_t code = tmq_subscribe(tmq, lst);

|

||||

tmq_list_destroy(lst);

|

||||

if (code != 0) {

|

||||

return code;

|

||||

}

|

||||

} else {

|

||||

tscInfo("consumer:0x%" PRIx64 " not in ready state, close it directly", tmq->consumerId);

|

||||

}

|

||||

|

|

|

|||

|

|

@ -452,20 +452,21 @@ int32_t colDataAssign(SColumnInfoData* pColumnInfoData, const SColumnInfoData* p

|

|||

}

|

||||

|

||||

if (IS_VAR_DATA_TYPE(pColumnInfoData->info.type)) {

|

||||

int32_t newLen = pSource->varmeta.length;

|

||||

memcpy(pColumnInfoData->varmeta.offset, pSource->varmeta.offset, sizeof(int32_t) * numOfRows);

|

||||

if (pColumnInfoData->varmeta.allocLen < pSource->varmeta.length) {

|

||||

char* tmp = taosMemoryRealloc(pColumnInfoData->pData, pSource->varmeta.length);

|

||||

if (pColumnInfoData->varmeta.allocLen < newLen) {

|

||||

char* tmp = taosMemoryRealloc(pColumnInfoData->pData, newLen);

|

||||

if (tmp == NULL) {

|

||||

return TSDB_CODE_OUT_OF_MEMORY;

|

||||

}

|

||||

|

||||

pColumnInfoData->pData = tmp;

|

||||

pColumnInfoData->varmeta.allocLen = pSource->varmeta.length;

|

||||

pColumnInfoData->varmeta.allocLen = newLen;

|

||||

}

|

||||

|

||||

pColumnInfoData->varmeta.length = pSource->varmeta.length;

|

||||

pColumnInfoData->varmeta.length = newLen;

|

||||

if (pColumnInfoData->pData != NULL && pSource->pData != NULL) {

|

||||

memcpy(pColumnInfoData->pData, pSource->pData, pSource->varmeta.length);

|

||||

memcpy(pColumnInfoData->pData, pSource->pData, newLen);

|

||||

}

|

||||

} else {

|

||||

memcpy(pColumnInfoData->nullbitmap, pSource->nullbitmap, BitmapLen(numOfRows));

|

||||

|

|

@ -1687,7 +1688,29 @@ int32_t blockDataTrimFirstRows(SSDataBlock* pBlock, size_t n) {

|

|||

}

|

||||

|

||||

static void colDataKeepFirstNRows(SColumnInfoData* pColInfoData, size_t n, size_t total) {

|

||||

if (n >= total || n == 0) return;

|

||||

if (IS_VAR_DATA_TYPE(pColInfoData->info.type)) {

|

||||

if (pColInfoData->varmeta.length != 0) {

|

||||

int32_t newLen = pColInfoData->varmeta.offset[n];

|

||||

if (-1 == newLen) {

|

||||

for (int i = n - 1; i >= 0; --i) {

|

||||

newLen = pColInfoData->varmeta.offset[i];

|

||||

if (newLen != -1) {

|

||||

if (pColInfoData->info.type == TSDB_DATA_TYPE_JSON) {

|

||||

newLen += getJsonValueLen(pColInfoData->pData + newLen);

|

||||

} else {

|

||||

newLen += varDataTLen(pColInfoData->pData + newLen);

|

||||

}

|

||||

break;

|

||||

}

|

||||

}

|

||||

}

|

||||

if (newLen <= -1) {

|

||||

uFatal("colDataKeepFirstNRows: newLen:%d old:%d", newLen, pColInfoData->varmeta.length);

|

||||

} else {

|

||||

pColInfoData->varmeta.length = newLen;

|

||||

}

|

||||

}

|

||||

// pColInfoData->varmeta.length = colDataMoveVarData(pColInfoData, 0, n);

|

||||

memset(&pColInfoData->varmeta.offset[n], 0, total - n);

|

||||

}

|

||||
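Note on `colDataKeepFirstNRows` above: for variable-length columns the new byte length is taken from `offset[n]`, and when row `n` is NULL (offset `-1`) the code walks backwards to the last non-NULL row among the first `n` and adds that value's total length. The sketch below reproduces that calculation on plain arrays. It assumes values are stored contiguously in row order and uses a hypothetical layout of a 2-byte length header followed by the payload; `value_total_len` and `kept_var_length` are illustrative names, not functions from the code base.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical value layout: a 2-byte length header followed by the payload. */
static int32_t value_total_len(const char *p) {
  uint16_t len;
  memcpy(&len, p, sizeof(len));
  return (int32_t)sizeof(len) + (int32_t)len;
}

/*
 * Byte length of the data buffer after keeping only the first n of `total`
 * rows. offset[i] == -1 marks a NULL row. Returns curLen when nothing is
 * trimmed and -1 when no length can be derived (all kept rows are NULL),
 * which is the case the original reports through uFatal.
 */
static int32_t kept_var_length(const int32_t *offset, const char *data, int32_t n, int32_t total,
                               int32_t curLen) {
  if (n >= total || n == 0) return curLen;   /* same early exit as the hunk */
  if (offset[n] != -1) return offset[n];     /* row n starts where the kept data ends */
  for (int32_t i = n - 1; i >= 0; --i) {     /* row n is NULL: find the last kept value */
    if (offset[i] != -1) return offset[i] + value_total_len(data + offset[i]);
  }
  return -1;
}
```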

|

|

|

|||

|

|

@@ -58,7 +58,7 @@ int32_t tsNumOfMnodeQueryThreads = 4;
 int32_t tsNumOfMnodeFetchThreads = 1;
 int32_t tsNumOfMnodeReadThreads = 1;
 int32_t tsNumOfVnodeQueryThreads = 4;
-float tsRatioOfVnodeStreamThreads = 1.5F;
+float tsRatioOfVnodeStreamThreads = 0.5F;
 int32_t tsNumOfVnodeFetchThreads = 4;
 int32_t tsNumOfVnodeRsmaThreads = 2;
 int32_t tsNumOfQnodeQueryThreads = 4;

|

|

@ -269,7 +269,6 @@ int64_t tsStreamBufferSize = 128 * 1024 * 1024;

|

|||

bool tsFilterScalarMode = false;

|

||||

int tsResolveFQDNRetryTime = 100; // seconds

|

||||

int tsStreamAggCnt = 1000;

|

||||

bool tsDisableCount = true;

|

||||

|

||||

char tsS3Endpoint[TSDB_FQDN_LEN] = "<endpoint>";

|

||||

char tsS3AccessKey[TSDB_FQDN_LEN] = "<accesskey>";

|

||||

|

|

@ -541,8 +540,6 @@ static int32_t taosAddClientCfg(SConfig *pCfg) {

|

|||

|

||||

if (cfgAddBool(pCfg, "monitor", tsEnableMonitor, CFG_SCOPE_SERVER, CFG_DYN_SERVER) != 0) return -1;

|

||||

if (cfgAddInt32(pCfg, "monitorInterval", tsMonitorInterval, 1, 200000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;

|

||||

|

||||

if (cfgAddBool(pCfg, "disableCount", tsDisableCount, CFG_SCOPE_CLIENT, CFG_DYN_CLIENT) != 0) return -1;

|

||||

return 0;

|

||||

}

|

||||

|

||||

|

|

@@ -589,7 +586,7 @@ static int32_t taosAddServerCfg(SConfig *pCfg) {
 
   tsNumOfSupportVnodes = tsNumOfCores * 2;
   tsNumOfSupportVnodes = TMAX(tsNumOfSupportVnodes, 2);
-  if (cfgAddInt32(pCfg, "supportVnodes", tsNumOfSupportVnodes, 0, 4096, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
+  if (cfgAddInt32(pCfg, "supportVnodes", tsNumOfSupportVnodes, 0, 4096, CFG_SCOPE_SERVER, CFG_DYN_ENT_SERVER) != 0) return -1;
 
   if (cfgAddInt32(pCfg, "statusInterval", tsStatusInterval, 1, 30, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
   if (cfgAddInt32(pCfg, "minSlidingTime", tsMinSlidingTime, 1, 1000000, CFG_SCOPE_CLIENT, CFG_DYN_CLIENT) != 0)

|

|

@ -705,7 +702,7 @@ static int32_t taosAddServerCfg(SConfig *pCfg) {

|

|||

if (cfgAddInt32(pCfg, "monitorIntervalForBasic", tsMonitorIntervalForBasic, 1, 200000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0)

|

||||

return -1;

|

||||

if (cfgAddBool(pCfg, "monitorForceV2", tsMonitorForceV2, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;

|

||||

|

||||

|

||||

if (cfgAddBool(pCfg, "audit", tsEnableAudit, CFG_SCOPE_SERVER, CFG_DYN_ENT_SERVER) != 0) return -1;

|

||||

if (cfgAddBool(pCfg, "auditCreateTable", tsEnableAuditCreateTable, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;

|

||||

if (cfgAddInt32(pCfg, "auditInterval", tsAuditInterval, 500, 200000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;

|

||||

|

|

@ -1109,8 +1106,6 @@ static int32_t taosSetClientCfg(SConfig *pCfg) {

|

|||

tsKeepAliveIdle = cfgGetItem(pCfg, "keepAliveIdle")->i32;

|

||||

|

||||

tsExperimental = cfgGetItem(pCfg, "experimental")->bval;

|

||||

|

||||

tsDisableCount = cfgGetItem(pCfg, "disableCount")->bval;

|

||||

return 0;

|

||||

}

|

||||

|

||||

|

|

@ -1174,7 +1169,7 @@ static int32_t taosSetServerCfg(SConfig *pCfg) {

|

|||

tsMonitorLogProtocol = cfgGetItem(pCfg, "monitorLogProtocol")->bval;

|

||||

tsMonitorIntervalForBasic = cfgGetItem(pCfg, "monitorIntervalForBasic")->i32;

|

||||

tsMonitorForceV2 = cfgGetItem(pCfg, "monitorForceV2")->i32;

|

||||

|

||||

|

||||

tsEnableAudit = cfgGetItem(pCfg, "audit")->bval;

|

||||

tsEnableAuditCreateTable = cfgGetItem(pCfg, "auditCreateTable")->bval;

|

||||

tsAuditInterval = cfgGetItem(pCfg, "auditInterval")->i32;

|

||||

|

|

@ -1263,6 +1258,8 @@ static int32_t taosSetReleaseCfg(SConfig *pCfg) { return 0; }

|

|||

int32_t taosSetReleaseCfg(SConfig *pCfg);

|

||||

#endif

|

||||

|

||||

static void taosSetAllDebugFlag(SConfig *pCfg, int32_t flag);

|

||||

|

||||

int32_t taosCreateLog(const char *logname, int32_t logFileNum, const char *cfgDir, const char **envCmd,

|

||||

const char *envFile, char *apolloUrl, SArray *pArgs, bool tsc) {

|

||||

if (tsCfg == NULL) osDefaultInit();

|

||||

|

|

@ -1307,7 +1304,7 @@ int32_t taosCreateLog(const char *logname, int32_t logFileNum, const char *cfgDi

|

|||

taosSetServerLogCfg(pCfg);

|

||||

}

|

||||

|

||||

taosSetAllDebugFlag(cfgGetItem(pCfg, "debugFlag")->i32);

|

||||

taosSetAllDebugFlag(pCfg, cfgGetItem(pCfg, "debugFlag")->i32);

|

||||

|

||||

if (taosMulModeMkDir(tsLogDir, 0777, true) != 0) {

|

||||

terrno = TAOS_SYSTEM_ERROR(errno);

|

||||

|

|

@ -1356,6 +1353,7 @@ int32_t taosInitCfg(const char *cfgDir, const char **envCmd, const char *envFile

|

|||

if (taosAddClientLogCfg(tsCfg) != 0) return -1;

|

||||

if (taosAddServerLogCfg(tsCfg) != 0) return -1;

|

||||

}

|

||||

|

||||

taosAddSystemCfg(tsCfg);

|

||||

|

||||

if (taosLoadCfg(tsCfg, envCmd, cfgDir, envFile, apolloUrl) != 0) {

|

||||

|

|

@ -1382,10 +1380,12 @@ int32_t taosInitCfg(const char *cfgDir, const char **envCmd, const char *envFile

|

|||

if (taosSetTfsCfg(tsCfg) != 0) return -1;

|

||||

if (taosSetS3Cfg(tsCfg) != 0) return -1;

|

||||

}

|

||||

|

||||

taosSetSystemCfg(tsCfg);

|

||||

|

||||

if (taosSetFileHandlesLimit() != 0) return -1;

|

||||

|

||||

taosSetAllDebugFlag(cfgGetItem(tsCfg, "debugFlag")->i32);

|

||||

taosSetAllDebugFlag(tsCfg, cfgGetItem(tsCfg, "debugFlag")->i32);

|

||||

|

||||

cfgDumpCfg(tsCfg, tsc, false);

|

||||

|

||||

|

|

@@ -1478,7 +1478,7 @@ static int32_t taosCfgDynamicOptionsForServer(SConfig *pCfg, char *name) {
   }
 
   if (strncasecmp(name, "debugFlag", 9) == 0) {
-    taosSetAllDebugFlag(pItem->i32);
+    taosSetAllDebugFlag(pCfg, pItem->i32);
     return 0;
   }
 

|

|

@@ -1552,7 +1552,7 @@ static int32_t taosCfgDynamicOptionsForClient(SConfig *pCfg, char *name) {
   switch (lowcaseName[0]) {
     case 'd': {
       if (strcasecmp("debugFlag", name) == 0) {
-        taosSetAllDebugFlag(pItem->i32);
+        taosSetAllDebugFlag(pCfg, pItem->i32);
         matched = true;
       }
       break;

|

|

@ -1737,8 +1737,7 @@ static int32_t taosCfgDynamicOptionsForClient(SConfig *pCfg, char *name) {

|

|||

{"shellActivityTimer", &tsShellActivityTimer},

|

||||

{"slowLogThreshold", &tsSlowLogThreshold},

|

||||

{"useAdapter", &tsUseAdapter},

|

||||

{"experimental", &tsExperimental},

|

||||

{"disableCount", &tsDisableCount}};

|

||||

{"experimental", &tsExperimental}};

|

||||

|

||||

if (taosCfgSetOption(debugOptions, tListLen(debugOptions), pItem, true) != 0) {

|

||||

taosCfgSetOption(options, tListLen(options), pItem, false);

|

||||

|

|

@ -1777,11 +1776,13 @@ static void taosCheckAndSetDebugFlag(int32_t *pFlagPtr, char *name, int32_t flag

|

|||

taosSetDebugFlag(pFlagPtr, name, flag);

|

||||

}

|

||||

|

||||

void taosSetAllDebugFlag(int32_t flag) {

|

||||

void taosSetGlobalDebugFlag(int32_t flag) { taosSetAllDebugFlag(tsCfg, flag); }

|

||||

|

||||

static void taosSetAllDebugFlag(SConfig *pCfg, int32_t flag) {

|

||||

if (flag <= 0) return;

|

||||

|

||||

SArray *noNeedToSetVars = NULL;

|

||||

SConfigItem *pItem = cfgGetItem(tsCfg, "debugFlag");

|

||||

SConfigItem *pItem = cfgGetItem(pCfg, "debugFlag");

|

||||

if (pItem != NULL) {

|

||||

pItem->i32 = flag;

|

||||

noNeedToSetVars = pItem->array;

|

||||

|

|

@ -1831,4 +1832,4 @@ int8_t taosGranted(int8_t type) {

|

|||

break;

|

||||

}

|

||||

return 0;

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -1009,19 +1009,19 @@ int32_t tDeserializeSCreateTagIdxReq(void *buf, int32_t bufLen, SCreateTagIndexR

|

|||

tDecoderClear(&decoder);

|

||||

return 0;

|

||||

}

|

||||

int32_t tSerializeSDropTagIdxReq(void *buf, int32_t bufLen, SDropTagIndexReq *pReq) {

|

||||

SEncoder encoder = {0};

|

||||

tEncoderInit(&encoder, buf, bufLen);

|

||||

if (tStartEncode(&encoder) < 0) return -1;

|

||||

tEndEncode(&encoder);

|

||||

// int32_t tSerializeSDropTagIdxReq(void *buf, int32_t bufLen, SDropTagIndexReq *pReq) {

|

||||

// SEncoder encoder = {0};

|

||||

// tEncoderInit(&encoder, buf, bufLen);

|

||||

// if (tStartEncode(&encoder) < 0) return -1;

|

||||

// tEndEncode(&encoder);

|

||||

|

||||

if (tEncodeCStr(&encoder, pReq->name) < 0) return -1;

|

||||

if (tEncodeI8(&encoder, pReq->igNotExists) < 0) return -1;

|

||||

// if (tEncodeCStr(&encoder, pReq->name) < 0) return -1;

|

||||

// if (tEncodeI8(&encoder, pReq->igNotExists) < 0) return -1;

|

||||

|

||||

int32_t tlen = encoder.pos;

|

||||

tEncoderClear(&encoder);

|

||||

return tlen;

|

||||

}

|

||||

// int32_t tlen = encoder.pos;

|

||||

// tEncoderClear(&encoder);

|

||||

// return tlen;

|

||||

// }

|

||||

int32_t tDeserializeSDropTagIdxReq(void *buf, int32_t bufLen, SDropTagIndexReq *pReq) {

|

||||

SDecoder decoder = {0};

|

||||

tDecoderInit(&decoder, buf, bufLen);

|

||||

|

|

@ -1035,6 +1035,7 @@ int32_t tDeserializeSDropTagIdxReq(void *buf, int32_t bufLen, SDropTagIndexReq *

|

|||

|

||||

return 0;

|

||||

}

|

||||

|

||||

int32_t tSerializeSMCreateFullTextReq(void *buf, int32_t bufLen, SMCreateFullTextReq *pReq) {

|

||||

SEncoder encoder = {0};

|

||||

tEncoderInit(&encoder, buf, bufLen);

|

||||

|

|

@ -1059,32 +1060,32 @@ void tFreeSMCreateFullTextReq(SMCreateFullTextReq *pReq) {

|

|||

// impl later

|

||||

return;

|

||||

}

|

||||

int32_t tSerializeSMDropFullTextReq(void *buf, int32_t bufLen, SMDropFullTextReq *pReq) {

|

||||

SEncoder encoder = {0};

|

||||

tEncoderInit(&encoder, buf, bufLen);

|

||||

// int32_t tSerializeSMDropFullTextReq(void *buf, int32_t bufLen, SMDropFullTextReq *pReq) {

|

||||

// SEncoder encoder = {0};

|

||||

// tEncoderInit(&encoder, buf, bufLen);

|

||||

|

||||

if (tStartEncode(&encoder) < 0) return -1;

|

||||

// if (tStartEncode(&encoder) < 0) return -1;

|

||||

|

||||

if (tEncodeCStr(&encoder, pReq->name) < 0) return -1;

|

||||

// if (tEncodeCStr(&encoder, pReq->name) < 0) return -1;

|

||||

|

||||

if (tEncodeI8(&encoder, pReq->igNotExists) < 0) return -1;

|

||||

// if (tEncodeI8(&encoder, pReq->igNotExists) < 0) return -1;

|

||||

|

||||

tEndEncode(&encoder);

|

||||

int32_t tlen = encoder.pos;

|

||||

tEncoderClear(&encoder);

|

||||

return tlen;

|

||||

}

|

||||

int32_t tDeserializeSMDropFullTextReq(void *buf, int32_t bufLen, SMDropFullTextReq *pReq) {

|

||||

SDecoder decoder = {0};

|

||||

tDecoderInit(&decoder, buf, bufLen);

|

||||

if (tStartDecode(&decoder) < 0) return -1;

|

||||

if (tDecodeCStrTo(&decoder, pReq->name) < 0) return -1;

|

||||

if (tDecodeI8(&decoder, &pReq->igNotExists) < 0) return -1;

|

||||

// tEndEncode(&encoder);

|

||||

// int32_t tlen = encoder.pos;

|

||||

// tEncoderClear(&encoder);

|

||||

// return tlen;

|

||||

// }

|

||||

// int32_t tDeserializeSMDropFullTextReq(void *buf, int32_t bufLen, SMDropFullTextReq *pReq) {

|

||||

// SDecoder decoder = {0};

|

||||

// tDecoderInit(&decoder, buf, bufLen);

|

||||

// if (tStartDecode(&decoder) < 0) return -1;

|

||||

// if (tDecodeCStrTo(&decoder, pReq->name) < 0) return -1;

|

||||

// if (tDecodeI8(&decoder, &pReq->igNotExists) < 0) return -1;

|

||||

|

||||

tEndDecode(&decoder);

|

||||

tDecoderClear(&decoder);

|

||||

return 0;

|

||||

}

|

||||

// tEndDecode(&decoder);

|

||||

// tDecoderClear(&decoder);

|

||||

// return 0;

|

||||

// }

|

||||

|

||||

int32_t tSerializeSNotifyReq(void *buf, int32_t bufLen, SNotifyReq *pReq) {

|

||||

SEncoder encoder = {0};

|

||||

|

|