Merge branch '3.0' of https://github.com/taosdata/TDengine into fix/TD-28627

commit 9de0643566

@ -24,7 +24,7 @@ SELECT [hints] [DISTINCT] [TAGS] select_list
hints: /*+ [hint([hint_param_list])] [hint([hint_param_list])] */

hint:
BATCH_SCAN | NO_BATCH_SCAN | SORT_FOR_GROUP | PARA_TABLES_SORT
BATCH_SCAN | NO_BATCH_SCAN | SORT_FOR_GROUP | PARA_TABLES_SORT | PARTITION_FIRST | SMALLDATA_TS_SORT

select_list:
select_expr [, select_expr] ...

@ -94,6 +94,7 @@ The list of currently supported Hints is as follows:
| SORT_FOR_GROUP| None | Uses sorting for grouping; conflicts with PARTITION_FIRST | When the PARTITION BY list contains a normal column |
| PARTITION_FIRST| None | Computes groups with PARTITION before aggregation; conflicts with SORT_FOR_GROUP | When the PARTITION BY list contains a normal column |
| PARA_TABLES_SORT| None | When sorting supertable rows by timestamp, no temporary disk space is used; only memory is used. When there are many tables with long rows, the algorithm associated with this hint may consume a large amount of memory and can lead to an Out Of Memory (OOM) condition. | Sorting supertable rows by timestamp |
| SMALLDATA_TS_SORT| None | When sorting supertable rows by timestamp, if the length of the queried columns is >= 256 and there are relatively few rows, this hint can improve performance. | Sorting supertable rows by timestamp |

For example:

@ -102,6 +103,7 @@ SELECT /*+ BATCH_SCAN() */ a.ts FROM stable1 a, stable2 b where a.tag0 = b.tag0
SELECT /*+ SORT_FOR_GROUP() */ count(*), c1 FROM stable1 PARTITION BY c1;
SELECT /*+ PARTITION_FIRST() */ count(*), c1 FROM stable1 PARTITION BY c1;
SELECT /*+ PARA_TABLES_SORT() */ * from stable1 order by ts;
SELECT /*+ SMALLDATA_TS_SORT() */ * from stable1 order by ts;
```

## Lists
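
The hint grammar above (`/*+ hint(...) hint(...) */`) and the documented conflict between SORT_FOR_GROUP and PARTITION_FIRST can be illustrated with a small C sketch. This is not TDengine's parser. `extract_hints`, `has_hint`, and `hints_conflict` are hypothetical helpers written only for illustration. The sketch makes three assumptions: only the first hint comment in the statement matters, hint names are compared case-sensitively, and parameter lists are skipped rather than interpreted.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MAX_HINTS 8
#define MAX_HINT_NAME 32

// Hypothetical helper (not TDengine code): copies the hint names found in the
// first hint comment of `sql` into `names` and returns how many were found.
// Parameter lists such as "(a, b)" are skipped, not parsed.
static int extract_hints(const char *sql, char names[][MAX_HINT_NAME], int max) {
  assert(sql != NULL);
  const char *begin = strstr(sql, "/*+");
  if (begin == NULL) return 0;
  begin += 3;  // step over the three-character hint opener
  const char *end = strstr(begin, "*/");
  if (end == NULL) return 0;  // unterminated hint comment: treat as no hints

  int count = 0;
  const char *p = begin;
  while (p < end && count < max) {
    // Skip whitespace and separators until the next identifier.
    while (p < end && !(isalpha((unsigned char)*p) || *p == '_')) p++;
    const char *nameStart = p;
    while (p < end && (isalnum((unsigned char)*p) || *p == '_')) p++;
    size_t len = (size_t)(p - nameStart);
    if (len == 0) break;                                // nothing left before the closing marker
    if (len >= MAX_HINT_NAME) len = MAX_HINT_NAME - 1;  // truncate overlong names
    memcpy(names[count], nameStart, len);
    names[count][len] = '\0';
    count++;
    // Skip an optional "(...)" parameter list after the hint name.
    while (p < end && isspace((unsigned char)*p)) p++;
    if (p < end && *p == '(') {
      while (p < end && *p != ')') p++;
      if (p < end) p++;  // consume ')'
    }
  }
  return count;
}

static bool has_hint(char names[][MAX_HINT_NAME], int n, const char *hint) {
  for (int i = 0; i < n; i++) {
    if (strcmp(names[i], hint) == 0) return true;
  }
  return false;
}

// The hint table documents SORT_FOR_GROUP and PARTITION_FIRST as conflicting.
static bool hints_conflict(const char *sql) {
  char names[MAX_HINTS][MAX_HINT_NAME];
  int n = extract_hints(sql, names, MAX_HINTS);
  return has_hint(names, n, "SORT_FOR_GROUP") && has_hint(names, n, "PARTITION_FIRST");
}
```

For example, `hints_conflict("SELECT /*+ SORT_FOR_GROUP() PARTITION_FIRST() */ count(*), c1 FROM stable1 PARTITION BY c1;")` returns true. The same query with only one of the two hints returns false.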

@ -24,7 +24,7 @@ SELECT [hints] [DISTINCT] [TAGS] select_list
hints: /*+ [hint([hint_param_list])] [hint([hint_param_list])] */

hint:
BATCH_SCAN | NO_BATCH_SCAN | SORT_FOR_GROUP | PARA_TABLES_SORT
BATCH_SCAN | NO_BATCH_SCAN | SORT_FOR_GROUP | PARTITION_FIRST | PARA_TABLES_SORT | SMALLDATA_TS_SORT

select_list:
select_expr [, select_expr] ...

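@ -94,6 +94,8 @@ Hints are a means for users to control query optimization for a single statement. When a Hint is not
| SORT_FOR_GROUP| None | Uses sorting for grouping; conflicts with PARTITION_FIRST | When the PARTITION BY list contains a normal column |
| PARTITION_FIRST| None | Computes groups with PARTITION before aggregation; conflicts with SORT_FOR_GROUP | When the PARTITION BY list contains a normal column |
| PARA_TABLES_SORT| None | When sorting supertable data by timestamp, no temporary disk space is used, only memory. When there are many subtables with long rows, a large amount of memory is used and OOM may occur | When sorting supertable data by timestamp |
| SMALLDATA_TS_SORT| None | When sorting supertable data by timestamp, if the length of the queried columns is >= 256 but the number of rows is small, this hint can improve performance | When sorting supertable data by timestamp |

For example:

```sql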

@ -101,6 +103,7 @@ SELECT /*+ BATCH_SCAN() */ a.ts FROM stable1 a, stable2 b where a.tag0 = b.tag0
SELECT /*+ SORT_FOR_GROUP() */ count(*), c1 FROM stable1 PARTITION BY c1;
SELECT /*+ PARTITION_FIRST() */ count(*), c1 FROM stable1 PARTITION BY c1;
SELECT /*+ PARA_TABLES_SORT() */ * from stable1 order by ts;
SELECT /*+ SMALLDATA_TS_SORT() */ * from stable1 order by ts;
```

## Lists

@ -49,6 +49,7 @@ window_clause: {
| STATE_WINDOW(col)
| INTERVAL(interval_val [, interval_offset]) [SLIDING (sliding_val)] [FILL(fill_mod_and_val)]
| EVENT_WINDOW START WITH start_trigger_condition END WITH end_trigger_condition
| COUNT_WINDOW(count_val[, sliding_val])
}
```

@ -180,6 +181,19 @@ select _wstart, _wend, count(*) from t event_window start with c1 > 0 end with c

|

|||

|

||||

|

||||

|

||||

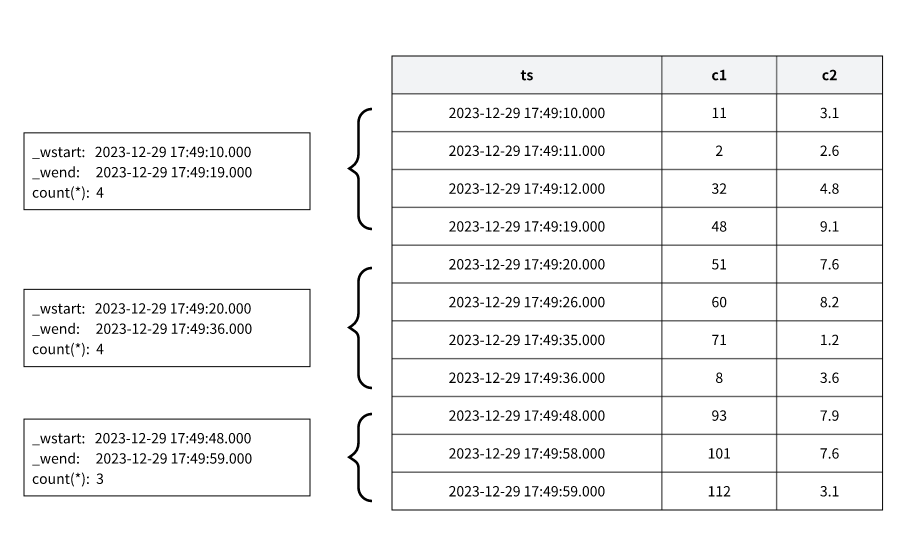

### 计数窗口

|

||||

|

||||

计数窗口按固定的数据行数来划分窗口。默认将数据按时间戳排序,再按照count_val的值,将数据划分为多个窗口,然后做聚合计算。count_val表示每个count window包含的最大数据行数,总数据行数不能整除count_val时,最后一个窗口的行数会小于count_val。sliding_val是常量,表示窗口滑动的数量,类似于 interval的SLIDING。

|

||||

|

||||

以下面的 SQL 语句为例,计数窗口切分如图所示:

|

||||

```sql

|

||||

select _wstart, _wend, count(*) from t count_window(4);

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### 时间戳伪列

|

||||

|

||||

窗口聚合查询结果中,如果 SQL 语句中没有指定输出查询结果中的时间戳列,那么最终结果中不会自动包含窗口的时间列信息。如果需要在结果中输出聚合结果所对应的时间窗口信息,需要在 SELECT 子句中使用时间戳相关的伪列: 时间窗口起始时间 (\_WSTART), 时间窗口结束时间 (\_WEND), 时间窗口持续时间 (\_WDURATION), 以及查询整体窗口相关的伪列: 查询窗口起始时间(\_QSTART) 和查询窗口结束时间(\_QEND)。需要注意的是时间窗口起始时间和结束时间均是闭区间,时间窗口持续时间是数据当前时间分辨率下的数值。例如,如果当前数据库的时间分辨率是毫秒,那么结果中 500 就表示当前时间窗口的持续时间是 500毫秒 (500 ms)。

|

||||

|

|

|

|||
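
The count window description above (count_val, sliding_val) can be sketched in C as index arithmetic over timestamp-sorted rows. This is a hypothetical helper, not TDengine's window operator; `split_count_windows` and `CountWindow` are illustrative names. Two behaviours are assumptions, not documented facts. First, a `slidingVal` of 0 is treated as "sliding_val not specified", which gives non-overlapping windows of count_val rows. Second, window generation stops once a window reaches the last row.

```c
#include <assert.h>
#include <stddef.h>

// A count window as a half-open range [start, end) of row positions in the
// timestamp-sorted input.
typedef struct {
  size_t start;
  size_t end;
} CountWindow;

// Hypothetical helper (not TDengine code): splits numRows timestamp-sorted rows
// into windows of at most countVal rows, opening a new window every slidingVal
// rows. Returns the number of windows written to out (at most maxOut).
static size_t split_count_windows(size_t numRows, size_t countVal, size_t slidingVal,
                                  CountWindow *out, size_t maxOut) {
  assert(countVal > 0);
  // Assumption: 0 means sliding_val was omitted, giving non-overlapping windows.
  if (slidingVal == 0) slidingVal = countVal;

  size_t n = 0;
  for (size_t start = 0; start < numRows && n < maxOut; start += slidingVal) {
    size_t end = start + countVal;
    if (end > numRows) end = numRows;  // the last window may hold fewer rows
    out[n].start = start;
    out[n].end = end;
    n++;
    // Assumption: stop once a window reaches the last row, so no later window
    // is just a subset of this one.
    if (end == numRows) break;
  }
  return n;
}
```

With 10 rows and `count_window(4)`, this yields the row ranges [0,4), [4,8), and [8,10). The last window holds only 2 rows, which matches the rule that the final window may contain fewer than count_val rows.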

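
The timestamp pseudo-column description above says that `_WDURATION` is expressed in the database's time precision and that `_WSTART` and `_WEND` are inclusive bounds. Reading these values correctly therefore takes a little arithmetic. The C sketch below is illustrative only. `wduration_to_ns` and `ts_in_window` are hypothetical helpers, and the sketch assumes the precision is one of the strings `ms`, `us`, or `ns`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

// Hypothetical helper: converts a _WDURATION value, reported in the database's
// time precision, to nanoseconds. Returns -1 for an unrecognised precision.
static int64_t wduration_to_ns(int64_t duration, const char *precision) {
  assert(precision != NULL);
  if (strcmp(precision, "ms") == 0) return duration * 1000000LL;  // 1 ms = 10^6 ns
  if (strcmp(precision, "us") == 0) return duration * 1000LL;     // 1 us = 10^3 ns
  if (strcmp(precision, "ns") == 0) return duration;
  return -1;
}

// _WSTART and _WEND are both inclusive, so a timestamp equal to either bound
// belongs to the window.
static bool ts_in_window(int64_t ts, int64_t wstart, int64_t wend) {
  return ts >= wstart && ts <= wend;
}
```

For a millisecond-precision database, a `_WDURATION` of 500 converts to `wduration_to_ns(500, "ms") == 500000000` nanoseconds, which is 500 ms.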

@ -3820,6 +3820,7 @@ typedef struct {
uint32_t phyLen;
char* sql;
char* msg;
int8_t source;
} SVDeleteReq;

int32_t tSerializeSVDeleteReq(void* buf, int32_t bufLen, SVDeleteReq* pReq);

@ -3841,6 +3842,7 @@ typedef struct SDeleteRes {
char tableFName[TSDB_TABLE_NAME_LEN];
char tsColName[TSDB_COL_NAME_LEN];
int64_t ctimeMs; // fill by vnode
int8_t source;
} SDeleteRes;

int32_t tEncodeDeleteRes(SEncoder* pCoder, const SDeleteRes* pRes);

@ -380,7 +380,7 @@
#define TK_SORT_FOR_GROUP 608
#define TK_PARTITION_FIRST 609
#define TK_PARA_TABLES_SORT 610

#define TK_SMALLDATA_TS_SORT 611

#define TK_NK_NIL 65535

@ -223,10 +223,10 @@ typedef struct SStoreTqReader {
bool (*tqReaderCurrentBlockConsumed)();

struct SWalReader* (*tqReaderGetWalReader)(); // todo remove it
int32_t (*tqReaderRetrieveTaosXBlock)(); // todo remove it
// int32_t (*tqReaderRetrieveTaosXBlock)(); // todo remove it

int32_t (*tqReaderSetSubmitMsg)(); // todo remove it
bool (*tqReaderNextBlockFilterOut)();
// bool (*tqReaderNextBlockFilterOut)();
} SStoreTqReader;

typedef struct SStoreSnapshotFn {

@ -122,6 +122,7 @@ typedef struct SScanLogicNode {
bool isCountByTag; // true if selectstmt hasCountFunc & part by tag/tbname
SArray* pFuncTypes; // for last, last_row
bool paraTablesSort; // for table merge scan
bool smallDataTsSort; // disable row id sort for table merge scan
} SScanLogicNode;

typedef struct SJoinLogicNode {

@ -445,6 +446,7 @@ typedef struct STableScanPhysiNode {
bool filesetDelimited;
bool needCountEmptyTable;
bool paraTablesSort;
bool smallDataTsSort;
} STableScanPhysiNode;

typedef STableScanPhysiNode STableSeqScanPhysiNode;

@ -128,7 +128,8 @@ typedef enum EHintOption {
HINT_BATCH_SCAN,
HINT_SORT_FOR_GROUP,
HINT_PARTITION_FIRST,
HINT_PARA_TABLES_SORT
HINT_PARA_TABLES_SORT,
HINT_SMALLDATA_TS_SORT,
} EHintOption;

typedef struct SHintNode {

@ -78,6 +78,7 @@ typedef struct SSchedulerReq {
void* chkKillParam;
SExecResult* pExecRes;
void** pFetchRes;
int8_t source;
} SSchedulerReq;

int32_t schedulerInit(void);

@ -56,7 +56,6 @@ extern "C" {
#define STREAM_EXEC_T_RESTART_ALL_TASKS (-4)
#define STREAM_EXEC_T_STOP_ALL_TASKS (-5)
#define STREAM_EXEC_T_RESUME_TASK (-6)
#define STREAM_EXEC_T_UPDATE_TASK_EPSET (-7)

typedef struct SStreamTask SStreamTask;
typedef struct SStreamQueue SStreamQueue;

@ -783,11 +782,14 @@ bool streamTaskIsAllUpstreamClosed(SStreamTask* pTask);
bool streamTaskSetSchedStatusWait(SStreamTask* pTask);
int8_t streamTaskSetSchedStatusActive(SStreamTask* pTask);
int8_t streamTaskSetSchedStatusInactive(SStreamTask* pTask);
int32_t streamTaskClearHTaskAttr(SStreamTask* pTask, int32_t clearRelHalt, bool metaLock);
int32_t streamTaskClearHTaskAttr(SStreamTask* pTask, int32_t clearRelHalt);

int32_t streamTaskHandleEvent(SStreamTaskSM* pSM, EStreamTaskEvent event);
int32_t streamTaskOnHandleEventSuccess(SStreamTaskSM* pSM, EStreamTaskEvent event);
void streamTaskRestoreStatus(SStreamTask* pTask);

typedef int32_t (*__state_trans_user_fn)(SStreamTask*, void* param);
int32_t streamTaskHandleEventAsync(SStreamTaskSM* pSM, EStreamTaskEvent event, __state_trans_user_fn callbackFn, void* param);
int32_t streamTaskOnHandleEventSuccess(SStreamTaskSM* pSM, EStreamTaskEvent event, __state_trans_user_fn callbackFn, void* param);
int32_t streamTaskRestoreStatus(SStreamTask* pTask);

int32_t streamSendCheckRsp(const SStreamMeta* pMeta, const SStreamTaskCheckReq* pReq, SStreamTaskCheckRsp* pRsp,
SRpcHandleInfo* pRpcInfo, int32_t taskId);

@ -62,6 +62,7 @@ typedef struct SRpcHandleInfo {

SRpcConnInfo conn;
int8_t forbiddenIp;
int8_t notFreeAhandle;

} SRpcHandleInfo;

@ -119,6 +119,13 @@ int32_t taosSetFileHandlesLimit();

int32_t taosLinkFile(char *src, char *dst);

FILE* taosOpenCFile(const char* filename, const char* mode);
int taosSeekCFile(FILE* file, int64_t offset, int whence);
size_t taosReadFromCFile(void *buffer, size_t size, size_t count, FILE *stream );
size_t taosWriteToCFile(const void* ptr, size_t size, size_t nitems, FILE* stream);
int taosCloseCFile(FILE *);
int taosSetAutoDelFile(char* path);

bool lastErrorIsFileNotExist();

#ifdef __cplusplus

@ -284,6 +284,7 @@ typedef struct SRequestObj {
void* pWrapper;
SMetaData parseMeta;
char* effectiveUser;
int8_t source;
} SRequestObj;

typedef struct SSyncQueryParam {

@ -297,8 +298,7 @@ void* doFetchRows(SRequestObj* pRequest, bool setupOneRowPtr, bool convertUcs4);

void doSetOneRowPtr(SReqResultInfo* pResultInfo);
void setResPrecision(SReqResultInfo* pResInfo, int32_t precision);
int32_t setQueryResultFromRsp(SReqResultInfo* pResultInfo, const SRetrieveTableRsp* pRsp, bool convertUcs4,
bool freeAfterUse);
int32_t setQueryResultFromRsp(SReqResultInfo* pResultInfo, const SRetrieveTableRsp* pRsp, bool convertUcs4);
int32_t setResultDataPtr(SReqResultInfo* pResultInfo, TAOS_FIELD* pFields, int32_t numOfCols, int32_t numOfRows,
bool convertUcs4);
void setResSchemaInfo(SReqResultInfo* pResInfo, const SSchema* pSchema, int32_t numOfCols);

@ -306,10 +306,10 @@ void doFreeReqResultInfo(SReqResultInfo* pResInfo);
int32_t transferTableNameList(const char* tbList, int32_t acctId, char* dbName, SArray** pReq);
void syncCatalogFn(SMetaData* pResult, void* param, int32_t code);

TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly);
TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly, int8_t source);
TAOS_RES* taosQueryImplWithReqid(TAOS* taos, const char* sql, bool validateOnly, int64_t reqid);

void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly);
void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly, int8_t source);
void taosAsyncQueryImplWithReqid(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly,
int64_t reqid);
void taosAsyncFetchImpl(SRequestObj *pRequest, __taos_async_fn_t fp, void *param);

@ -354,6 +354,7 @@ SRequestObj* acquireRequest(int64_t rid);
int32_t releaseRequest(int64_t rid);
int32_t removeRequest(int64_t rid);
void doDestroyRequest(void* p);
int64_t removeFromMostPrevReq(SRequestObj* pRequest);

char* getDbOfConnection(STscObj* pObj);
void setConnectionDB(STscObj* pTscObj, const char* db);

@ -385,6 +385,33 @@ int32_t releaseRequest(int64_t rid) { return taosReleaseRef(clientReqRefPool, ri

int32_t removeRequest(int64_t rid) { return taosRemoveRef(clientReqRefPool, rid); }

/// return the most previous req ref id
int64_t removeFromMostPrevReq(SRequestObj* pRequest) {
int64_t mostPrevReqRefId = pRequest->self;
SRequestObj* pTmp = pRequest;
while (pTmp->relation.prevRefId) {
pTmp = acquireRequest(pTmp->relation.prevRefId);
if (pTmp) {
mostPrevReqRefId = pTmp->self;
releaseRequest(mostPrevReqRefId);
} else {
break;
}
}
removeRequest(mostPrevReqRefId);
return mostPrevReqRefId;
}

void destroyNextReq(int64_t nextRefId) {
if (nextRefId) {
SRequestObj* pObj = acquireRequest(nextRefId);
if (pObj) {
releaseRequest(nextRefId);
releaseRequest(nextRefId);
}
}
}

void destroySubRequests(SRequestObj *pRequest) {
int32_t reqIdx = -1;
SRequestObj *pReqList[16] = {NULL};

@ -435,7 +462,7 @@ void doDestroyRequest(void *p) {
uint64_t reqId = pRequest->requestId;
tscTrace("begin to destroy request %" PRIx64 " p:%p", reqId, pRequest);

destroySubRequests(pRequest);
int64_t nextReqRefId = pRequest->relation.nextRefId;

taosHashRemove(pRequest->pTscObj->pRequests, &pRequest->self, sizeof(pRequest->self));

@ -471,6 +498,7 @@ void doDestroyRequest(void *p) {
taosMemoryFreeClear(pRequest->sqlstr);
taosMemoryFree(pRequest);
tscTrace("end to destroy request %" PRIx64 " p:%p", reqId, pRequest);
destroyNextReq(nextReqRefId);
}

void destroyRequest(SRequestObj *pRequest) {

@ -479,7 +507,7 @@ void destroyRequest(SRequestObj *pRequest) {
}

taos_stop_query(pRequest);
removeRequest(pRequest->self);
removeFromMostPrevReq(pRequest);
}

void taosStopQueryImpl(SRequestObj *pRequest) {

@ -302,7 +302,7 @@ int32_t execLocalCmd(SRequestObj* pRequest, SQuery* pQuery) {
int8_t biMode = atomic_load_8(&pRequest->pTscObj->biMode);
int32_t code = qExecCommand(&pRequest->pTscObj->id, pRequest->pTscObj->sysInfo, pQuery->pRoot, &pRsp, biMode);
if (TSDB_CODE_SUCCESS == code && NULL != pRsp) {
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRsp, false, true);
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRsp, false);
}

return code;

@ -341,7 +341,7 @@ void asyncExecLocalCmd(SRequestObj* pRequest, SQuery* pQuery) {
int32_t code = qExecCommand(&pRequest->pTscObj->id, pRequest->pTscObj->sysInfo, pQuery->pRoot, &pRsp,
atomic_load_8(&pRequest->pTscObj->biMode));
if (TSDB_CODE_SUCCESS == code && NULL != pRsp) {
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRsp, false, true);
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRsp, false);
}

SReqResultInfo* pResultInfo = &pRequest->body.resInfo;

@ -743,6 +743,7 @@ int32_t scheduleQuery(SRequestObj* pRequest, SQueryPlan* pDag, SArray* pNodeList
.chkKillFp = chkRequestKilled,
.chkKillParam = (void*)pRequest->self,
.pExecRes = &res,
.source = pRequest->source,
};

int32_t code = schedulerExecJob(&req, &pRequest->body.queryJob);

@ -1212,6 +1213,7 @@ static int32_t asyncExecSchQuery(SRequestObj* pRequest, SQuery* pQuery, SMetaDat
.chkKillFp = chkRequestKilled,
.chkKillParam = (void*)pRequest->self,
.pExecRes = NULL,
.source = pRequest->source,
};
code = schedulerExecJob(&req, &pRequest->body.queryJob);
taosArrayDestroy(pNodeList);

@ -1719,7 +1721,7 @@ void* doFetchRows(SRequestObj* pRequest, bool setupOneRowPtr, bool convertUcs4)
}

pRequest->code =
setQueryResultFromRsp(&pRequest->body.resInfo, (const SRetrieveTableRsp*)pResInfo->pData, convertUcs4, true);
setQueryResultFromRsp(&pRequest->body.resInfo, (const SRetrieveTableRsp*)pResInfo->pData, convertUcs4);
if (pRequest->code != TSDB_CODE_SUCCESS) {
pResultInfo->numOfRows = 0;
return NULL;

@ -2178,15 +2180,13 @@ void resetConnectDB(STscObj* pTscObj) {
taosThreadMutexUnlock(&pTscObj->mutex);
}

int32_t setQueryResultFromRsp(SReqResultInfo* pResultInfo, const SRetrieveTableRsp* pRsp, bool convertUcs4,
bool freeAfterUse) {
int32_t setQueryResultFromRsp(SReqResultInfo* pResultInfo, const SRetrieveTableRsp* pRsp, bool convertUcs4) {
if (pResultInfo == NULL || pRsp == NULL) {
tscError("setQueryResultFromRsp paras is null");
return TSDB_CODE_TSC_INTERNAL_ERROR;
}

if (freeAfterUse) taosMemoryFreeClear(pResultInfo->pRspMsg);

taosMemoryFreeClear(pResultInfo->pRspMsg);
pResultInfo->pRspMsg = (const char*)pRsp;
pResultInfo->pData = (void*)pRsp->data;
pResultInfo->numOfRows = htobe64(pRsp->numOfRows);

@ -2475,7 +2475,7 @@ void syncQueryFn(void* param, void* res, int32_t code) {
tsem_post(&pParam->sem);
}

void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly) {
void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp, void* param, bool validateOnly, int8_t source) {
if (sql == NULL || NULL == fp) {
terrno = TSDB_CODE_INVALID_PARA;
if (fp) {

@ -2501,6 +2501,7 @@ void taosAsyncQueryImpl(uint64_t connId, const char* sql, __taos_async_fn_t fp,
return;
}

pRequest->source = source;
pRequest->body.queryFp = fp;
doAsyncQuery(pRequest, false);
}

@ -2535,7 +2536,7 @@ void taosAsyncQueryImplWithReqid(uint64_t connId, const char* sql, __taos_async_
doAsyncQuery(pRequest, false);
}

TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly) {
TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly, int8_t source) {
if (NULL == taos) {
terrno = TSDB_CODE_TSC_DISCONNECTED;
return NULL;

@ -2550,7 +2551,7 @@ TAOS_RES* taosQueryImpl(TAOS* taos, const char* sql, bool validateOnly) {
}
tsem_init(&param->sem, 0, 0);

taosAsyncQueryImpl(*(int64_t*)taos, sql, syncQueryFn, param, validateOnly);
taosAsyncQueryImpl(*(int64_t*)taos, sql, syncQueryFn, param, validateOnly, source);
tsem_wait(&param->sem);

SRequestObj* pRequest = NULL;

@ -2615,7 +2616,7 @@ static void fetchCallback(void* pResult, void* param, int32_t code) {
}

pRequest->code =
setQueryResultFromRsp(pResultInfo, (const SRetrieveTableRsp*)pResultInfo->pData, pResultInfo->convertUcs4, true);
setQueryResultFromRsp(pResultInfo, (const SRetrieveTableRsp*)pResultInfo->pData, pResultInfo->convertUcs4);
if (pRequest->code != TSDB_CODE_SUCCESS) {
pResultInfo->numOfRows = 0;
pRequest->code = code;

@ -350,7 +350,6 @@ void taos_free_result(TAOS_RES *res) {
taosArrayDestroy(pRsp->rsp.createTableLen);
taosArrayDestroyP(pRsp->rsp.createTableReq, taosMemoryFree);

pRsp->resInfo.pRspMsg = NULL;
doFreeReqResultInfo(&pRsp->resInfo);
taosMemoryFree(pRsp);
} else if (TD_RES_TMQ(res)) {

@ -359,7 +358,6 @@ void taos_free_result(TAOS_RES *res) {
taosArrayDestroy(pRsp->rsp.blockDataLen);
taosArrayDestroyP(pRsp->rsp.blockTbName, taosMemoryFree);
taosArrayDestroyP(pRsp->rsp.blockSchema, (FDelete)tDeleteSchemaWrapper);
pRsp->resInfo.pRspMsg = NULL;
doFreeReqResultInfo(&pRsp->resInfo);
taosMemoryFree(pRsp);
} else if (TD_RES_TMQ_META(res)) {

@ -402,7 +400,7 @@ TAOS_FIELD *taos_fetch_fields(TAOS_RES *res) {
return pResInfo->userFields;
}

TAOS_RES *taos_query(TAOS *taos, const char *sql) { return taosQueryImpl(taos, sql, false); }
TAOS_RES *taos_query(TAOS *taos, const char *sql) { return taosQueryImpl(taos, sql, false, TD_REQ_FROM_APP); }
TAOS_RES *taos_query_with_reqid(TAOS *taos, const char *sql, int64_t reqid) {
return taosQueryImplWithReqid(taos, sql, false, reqid);
}

@ -828,7 +826,7 @@ int *taos_get_column_data_offset(TAOS_RES *res, int columnIndex) {
}

int taos_validate_sql(TAOS *taos, const char *sql) {
TAOS_RES *pObj = taosQueryImpl(taos, sql, true);
TAOS_RES *pObj = taosQueryImpl(taos, sql, true, TD_REQ_FROM_APP);

int code = taos_errno(pObj);

@ -1126,7 +1124,7 @@ void continueInsertFromCsv(SSqlCallbackWrapper *pWrapper, SRequestObj *pRequest)
void taos_query_a(TAOS *taos, const char *sql, __taos_async_fn_t fp, void *param) {
int64_t connId = *(int64_t *)taos;
tscDebug("taos_query_a start with sql:%s", sql);
taosAsyncQueryImpl(connId, sql, fp, param, false);
taosAsyncQueryImpl(connId, sql, fp, param, false, TD_REQ_FROM_APP);
tscDebug("taos_query_a end with sql:%s", sql);
}

@ -1254,54 +1252,34 @@ void doAsyncQuery(SRequestObj *pRequest, bool updateMetaForce) {
}

void restartAsyncQuery(SRequestObj *pRequest, int32_t code) {
int32_t reqIdx = 0;
SRequestObj *pReqList[16] = {NULL};
SRequestObj *pUserReq = NULL;
pReqList[0] = pRequest;
uint64_t tmpRefId = 0;
SRequestObj *pTmp = pRequest;
while (pTmp->relation.prevRefId) {
tmpRefId = pTmp->relation.prevRefId;
pTmp = acquireRequest(tmpRefId);
if (pTmp) {
pReqList[++reqIdx] = pTmp;
releaseRequest(tmpRefId);
} else {
tscError("prev req ref 0x%" PRIx64 " is not there", tmpRefId);
tscInfo("restart request: %s p: %p", pRequest->sqlstr, pRequest);
SRequestObj* pUserReq = pRequest;
acquireRequest(pRequest->self);
while (pUserReq) {
if (pUserReq->self == pUserReq->relation.userRefId || pUserReq->relation.userRefId == 0) {
break;
}
}

tmpRefId = pRequest->relation.nextRefId;
while (tmpRefId) {
pTmp = acquireRequest(tmpRefId);
if (pTmp) {
tmpRefId = pTmp->relation.nextRefId;
removeRequest(pTmp->self);
releaseRequest(pTmp->self);
} else {
tscError("next req ref 0x%" PRIx64 " is not there", tmpRefId);
break;
int64_t nextRefId = pUserReq->relation.nextRefId;
releaseRequest(pUserReq->self);
if (nextRefId) {
pUserReq = acquireRequest(nextRefId);
}
}
}

for (int32_t i = reqIdx; i >= 0; i--) {
destroyCtxInRequest(pReqList[i]);
if (pReqList[i]->relation.userRefId == pReqList[i]->self || 0 == pReqList[i]->relation.userRefId) {
pUserReq = pReqList[i];
} else {
removeRequest(pReqList[i]->self);
}
}

bool hasSubRequest = pUserReq != pRequest || pRequest->relation.prevRefId != 0;
if (pUserReq) {
destroyCtxInRequest(pUserReq);
pUserReq->prevCode = code;
memset(&pUserReq->relation, 0, sizeof(pUserReq->relation));
} else {
tscError("user req is missing");
tscError("User req is missing");
removeFromMostPrevReq(pRequest);
return;
}

if (hasSubRequest)
removeFromMostPrevReq(pRequest);
else
releaseRequest(pUserReq->self);
doAsyncQuery(pUserReq, true);
}

@ -538,7 +538,7 @@ int32_t processShowVariablesRsp(void* param, SDataBuf* pMsg, int32_t code) {
code = buildShowVariablesRsp(rsp.variables, &pRes);
}
if (TSDB_CODE_SUCCESS == code) {
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRes, false, true);
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRes, false);
}

if (code != 0) {

@ -651,7 +651,7 @@ int32_t processCompactDbRsp(void* param, SDataBuf* pMsg, int32_t code) {
code = buildRetriveTableRspForCompactDb(&rsp, &pRes);
}
if (TSDB_CODE_SUCCESS == code) {
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRes, false, true);
code = setQueryResultFromRsp(&pRequest->body.resInfo, pRes, false);
}

if (code != 0) {

@ -1256,7 +1256,7 @@ static int32_t taosDeleteData(TAOS* taos, void* meta, int32_t metaLen) {
snprintf(sql, sizeof(sql), "delete from `%s` where `%s` >= %" PRId64 " and `%s` <= %" PRId64, req.tableFName,
req.tsColName, req.skey, req.tsColName, req.ekey);

TAOS_RES* res = taos_query(taos, sql);
TAOS_RES* res = taosQueryImpl(taos, sql, false, TD_REQ_FROM_TAOX);
SRequestObj* pRequest = (SRequestObj*)res;
code = pRequest->code;
if (code == TSDB_CODE_PAR_TABLE_NOT_EXIST || code == TSDB_CODE_PAR_GET_META_ERROR) {

@ -1010,19 +1010,8 @@ int32_t tmq_unsubscribe(tmq_t* tmq) {
}
taosSsleep(2); // sleep 2s for hb to send offset and rows to server

int32_t rsp;
int32_t retryCnt = 0;
tmq_list_t* lst = tmq_list_new();
while (1) {
rsp = tmq_subscribe(tmq, lst);
if (rsp != TSDB_CODE_MND_CONSUMER_NOT_READY || retryCnt > 5) {
break;
} else {
retryCnt++;
taosMsleep(500);
}
}

int32_t rsp = tmq_subscribe(tmq, lst);
tmq_list_destroy(lst);
return rsp;
}

@ -1272,10 +1261,9 @@ int32_t tmq_subscribe(tmq_t* tmq, const tmq_list_t* topic_list) {
}

int32_t retryCnt = 0;
while (syncAskEp(tmq) != 0) {
if (retryCnt++ > MAX_RETRY_COUNT) {
while ((code = syncAskEp(tmq)) != 0) {
if (retryCnt++ > MAX_RETRY_COUNT || code == TSDB_CODE_MND_CONSUMER_NOT_EXIST) {
tscError("consumer:0x%" PRIx64 ", mnd not ready for subscribe, retry more than 2 minutes", tmq->consumerId);
code = TSDB_CODE_MND_CONSUMER_NOT_READY;
goto FAIL;
}

@ -2148,26 +2136,19 @@ int32_t tmq_consumer_close(tmq_t* tmq) {
if (tmq->status == TMQ_CONSUMER_STATUS__READY) {
// if auto commit is set, commit before close consumer. Otherwise, do nothing.
if (tmq->autoCommit) {
int32_t rsp = tmq_commit_sync(tmq, NULL);
if (rsp != 0) {
return rsp;
int32_t code = tmq_commit_sync(tmq, NULL);
if (code != 0) {
return code;
}
}
taosSsleep(2); // sleep 2s for hb to send offset and rows to server

int32_t retryCnt = 0;
tmq_list_t* lst = tmq_list_new();
while (1) {
int32_t rsp = tmq_subscribe(tmq, lst);
if (rsp != TSDB_CODE_MND_CONSUMER_NOT_READY || retryCnt > 5) {
break;
} else {
retryCnt++;
taosMsleep(500);
}
}

int32_t code = tmq_subscribe(tmq, lst);
tmq_list_destroy(lst);
if (code != 0) {
return code;
}
} else {
tscInfo("consumer:0x%" PRIx64 " not in ready state, close it directly", tmq->consumerId);
}

@ -2647,13 +2628,9 @@ SReqResultInfo* tmqGetNextResInfo(TAOS_RES* res, bool convertUcs4) {
SRetrieveTableRspForTmq* pRetrieveTmq =
(SRetrieveTableRspForTmq*)taosArrayGetP(pRspObj->rsp.blockData, pRspObj->resIter);
if (pRspObj->rsp.withSchema) {
doFreeReqResultInfo(&pRspObj->resInfo);
SSchemaWrapper* pSW = (SSchemaWrapper*)taosArrayGetP(pRspObj->rsp.blockSchema, pRspObj->resIter);
setResSchemaInfo(&pRspObj->resInfo, pSW->pSchema, pSW->nCols);
taosMemoryFreeClear(pRspObj->resInfo.row);
taosMemoryFreeClear(pRspObj->resInfo.pCol);
taosMemoryFreeClear(pRspObj->resInfo.length);
taosMemoryFreeClear(pRspObj->resInfo.convertBuf);
taosMemoryFreeClear(pRspObj->resInfo.convertJson);
}

pRspObj->resInfo.pData = (void*)pRetrieveTmq->data;

@ -58,7 +58,7 @@ int32_t tsNumOfMnodeQueryThreads = 4;
int32_t tsNumOfMnodeFetchThreads = 1;
int32_t tsNumOfMnodeReadThreads = 1;
int32_t tsNumOfVnodeQueryThreads = 4;
float tsRatioOfVnodeStreamThreads = 1.5F;
float tsRatioOfVnodeStreamThreads = 0.5F;
int32_t tsNumOfVnodeFetchThreads = 4;
int32_t tsNumOfVnodeRsmaThreads = 2;
int32_t tsNumOfQnodeQueryThreads = 4;

@ -502,7 +502,7 @@ static int32_t taosAddClientCfg(SConfig *pCfg) {
if (cfgAddInt32(pCfg, "maxRetryWaitTime", tsMaxRetryWaitTime, 0, 86400000, CFG_SCOPE_BOTH, CFG_DYN_CLIENT) != 0)
return -1;
if (cfgAddBool(pCfg, "useAdapter", tsUseAdapter, CFG_SCOPE_CLIENT, CFG_DYN_CLIENT) != 0) return -1;
if (cfgAddBool(pCfg, "crashReporting", tsEnableCrashReport, CFG_SCOPE_CLIENT, CFG_DYN_CLIENT) != 0) return -1;
if (cfgAddBool(pCfg, "crashReporting", tsEnableCrashReport, CFG_SCOPE_BOTH, CFG_DYN_CLIENT) != 0) return -1;
if (cfgAddInt64(pCfg, "queryMaxConcurrentTables", tsQueryMaxConcurrentTables, INT64_MIN, INT64_MAX, CFG_SCOPE_CLIENT,
CFG_DYN_NONE) != 0)
return -1;

@ -538,8 +538,8 @@ static int32_t taosAddClientCfg(SConfig *pCfg) {
return -1;
if (cfgAddBool(pCfg, "experimental", tsExperimental, CFG_SCOPE_BOTH, CFG_DYN_BOTH) != 0) return -1;

if (cfgAddBool(pCfg, "monitor", tsEnableMonitor, CFG_SCOPE_SERVER, CFG_DYN_SERVER) != 0) return -1;
if (cfgAddInt32(pCfg, "monitorInterval", tsMonitorInterval, 1, 200000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
if (cfgAddBool(pCfg, "monitor", tsEnableMonitor, CFG_SCOPE_BOTH, CFG_DYN_BOTH) != 0) return -1;
if (cfgAddInt32(pCfg, "monitorInterval", tsMonitorInterval, 1, 200000, CFG_SCOPE_BOTH, CFG_DYN_NONE) != 0) return -1;
return 0;
}

@@ -586,7 +586,7 @@ static int32_t taosAddServerCfg(SConfig *pCfg) {

tsNumOfSupportVnodes = tsNumOfCores * 2;
tsNumOfSupportVnodes = TMAX(tsNumOfSupportVnodes, 2);
if (cfgAddInt32(pCfg, "supportVnodes", tsNumOfSupportVnodes, 0, 4096, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
if (cfgAddInt32(pCfg, "supportVnodes", tsNumOfSupportVnodes, 0, 4096, CFG_SCOPE_SERVER, CFG_DYN_ENT_SERVER) != 0) return -1;

if (cfgAddInt32(pCfg, "statusInterval", tsStatusInterval, 1, 30, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
if (cfgAddInt32(pCfg, "minSlidingTime", tsMinSlidingTime, 1, 1000000, CFG_SCOPE_CLIENT, CFG_DYN_CLIENT) != 0)

@@ -600,18 +600,6 @@ static int32_t taosAddServerCfg(SConfig *pCfg) {
return -1;
if (cfgAddInt32(pCfg, "queryRspPolicy", tsQueryRspPolicy, 0, 1, CFG_SCOPE_SERVER, CFG_DYN_ENT_SERVER) != 0) return -1;

tsNumOfRpcThreads = tsNumOfCores / 2;
tsNumOfRpcThreads = TRANGE(tsNumOfRpcThreads, 2, TSDB_MAX_RPC_THREADS);
if (cfgAddInt32(pCfg, "numOfRpcThreads", tsNumOfRpcThreads, 1, 1024, CFG_SCOPE_BOTH, CFG_DYN_NONE) != 0) return -1;

tsNumOfRpcSessions = TRANGE(tsNumOfRpcSessions, 100, 10000);
if (cfgAddInt32(pCfg, "numOfRpcSessions", tsNumOfRpcSessions, 1, 100000, CFG_SCOPE_BOTH, CFG_DYN_NONE) != 0)
return -1;

tsTimeToGetAvailableConn = TRANGE(tsTimeToGetAvailableConn, 20, 1000000);
if (cfgAddInt32(pCfg, "timeToGetAvailableConn", tsNumOfRpcSessions, 20, 1000000, CFG_SCOPE_BOTH, CFG_DYN_NONE) != 0)
return -1;

tsNumOfCommitThreads = tsNumOfCores / 2;
tsNumOfCommitThreads = TRANGE(tsNumOfCommitThreads, 2, 4);
if (cfgAddInt32(pCfg, "numOfCommitThreads", tsNumOfCommitThreads, 1, 1024, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0)

@@ -691,9 +679,6 @@ static int32_t taosAddServerCfg(SConfig *pCfg) {
if (cfgAddInt32(pCfg, "grantMode", tsMndGrantMode, 0, 10000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
if (cfgAddBool(pCfg, "skipGrant", tsMndSkipGrant, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;

if (cfgAddBool(pCfg, "monitor", tsEnableMonitor, CFG_SCOPE_SERVER, CFG_DYN_SERVER) != 0) return -1;
if (cfgAddInt32(pCfg, "monitorInterval", tsMonitorInterval, 1, 200000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0)
return -1;
if (cfgAddString(pCfg, "monitorFqdn", tsMonitorFqdn, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
if (cfgAddInt32(pCfg, "monitorPort", tsMonitorPort, 1, 65056, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
if (cfgAddInt32(pCfg, "monitorMaxLogs", tsMonitorMaxLogs, 1, 1000000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;

@@ -707,7 +692,6 @@ static int32_t taosAddServerCfg(SConfig *pCfg) {
if (cfgAddBool(pCfg, "auditCreateTable", tsEnableAuditCreateTable, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;
if (cfgAddInt32(pCfg, "auditInterval", tsAuditInterval, 500, 200000, CFG_SCOPE_SERVER, CFG_DYN_NONE) != 0) return -1;

if (cfgAddBool(pCfg, "crashReporting", tsEnableCrashReport, CFG_SCOPE_BOTH, CFG_DYN_NONE) != 0) return -1;
if (cfgAddBool(pCfg, "telemetryReporting", tsEnableTelem, CFG_SCOPE_BOTH, CFG_DYN_ENT_SERVER) != 0) return -1;
if (cfgAddInt32(pCfg, "telemetryInterval", tsTelemInterval, 1, 200000, CFG_SCOPE_BOTH, CFG_DYN_NONE) != 0) return -1;
if (cfgAddString(pCfg, "telemetryServer", tsTelemServer, CFG_SCOPE_BOTH, CFG_DYN_BOTH) != 0) return -1;

@@ -814,8 +798,6 @@ static int32_t taosAddServerCfg(SConfig *pCfg) {
return -1;
if (cfgAddBool(pCfg, "enableWhiteList", tsEnableWhiteList, CFG_SCOPE_SERVER, CFG_DYN_ENT_SERVER) != 0) return -1;

if (cfgAddBool(pCfg, "experimental", tsExperimental, CFG_SCOPE_BOTH, CFG_DYN_BOTH) != 0) return -1;

// GRANT_CFG_ADD;
return 0;
}

@@ -1353,6 +1335,7 @@ int32_t taosInitCfg(const char *cfgDir, const char **envCmd, const char *envFile
if (taosAddClientLogCfg(tsCfg) != 0) return -1;
if (taosAddServerLogCfg(tsCfg) != 0) return -1;
}

taosAddSystemCfg(tsCfg);

if (taosLoadCfg(tsCfg, envCmd, cfgDir, envFile, apolloUrl) != 0) {

@@ -1379,7 +1362,9 @@ int32_t taosInitCfg(const char *cfgDir, const char **envCmd, const char *envFile
if (taosSetTfsCfg(tsCfg) != 0) return -1;
if (taosSetS3Cfg(tsCfg) != 0) return -1;
}

taosSetSystemCfg(tsCfg);

if (taosSetFileHandlesLimit() != 0) return -1;

taosSetAllDebugFlag(tsCfg, cfgGetItem(tsCfg, "debugFlag")->i32);

@@ -1626,6 +1611,10 @@ static int32_t taosCfgDynamicOptionsForClient(SConfig *pCfg, char *name) {
tsLogSpace.reserved = (int64_t)(((double)pItem->fval) * 1024 * 1024 * 1024);
uInfo("%s set to %" PRId64, name, tsLogSpace.reserved);
matched = true;
} else if (strcasecmp("monitor", name) == 0) {
tsEnableMonitor = pItem->bval;
uInfo("%s set to %d", name, tsEnableMonitor);
matched = true;
}
break;
}
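
The `taosCfgDynamicOptionsForClient` hunk adds a `monitor` branch to a chain that compares the option name with `strcasecmp` and copies the new value into a global. The sketch below isolates that dispatch pattern under simplifying assumptions. `DynCfgItem`, `apply_dynamic_option`, the two globals, and the `reservedLogGB` option name are hypothetical, and `printf` stands in for `uInfo`.

```c
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>
#include <strings.h> /* strcasecmp (POSIX) */

/* Hypothetical stand-in for a config item: only the fields used below. */
typedef struct {
  bool   bval;
  double fval;
} DynCfgItem;

/* Hypothetical globals mirroring tsEnableMonitor and tsLogSpace.reserved. */
static bool    g_enable_monitor = false;
static int64_t g_log_reserved_bytes = 0;

/* Applies one dynamically changed option; returns true if `name` matched. */
static bool apply_dynamic_option(const char *name, const DynCfgItem *item) {
  bool matched = false;
  if (strcasecmp("reservedLogGB", name) == 0) { /* hypothetical option name */
    /* GB -> bytes, as in the hunk: fval * 1024 * 1024 * 1024 */
    g_log_reserved_bytes = (int64_t)(item->fval * 1024 * 1024 * 1024);
    printf("%s set to %lld\n", name, (long long)g_log_reserved_bytes);
    matched = true;
  } else if (strcasecmp("monitor", name) == 0) {
    g_enable_monitor = item->bval;
    printf("%s set to %d\n", name, g_enable_monitor);
    matched = true;
  }
  return matched;
}
```

Matching is case-insensitive, so `MONITOR` and `monitor` reach the same branch. An unknown name changes nothing and returns `false`, which corresponds to `matched` staying false in the original.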

@@ -7192,6 +7192,7 @@ int32_t tSerializeSVDeleteReq(void *buf, int32_t bufLen, SVDeleteReq *pReq) {
if (tEncodeU32(&encoder, pReq->sqlLen) < 0) return -1;
if (tEncodeCStr(&encoder, pReq->sql) < 0) return -1;
if (tEncodeBinary(&encoder, pReq->msg, pReq->phyLen) < 0) return -1;
if (tEncodeI8(&encoder, pReq->source) < 0) return -1;
tEndEncode(&encoder);

int32_t tlen = encoder.pos;

@@ -7228,6 +7229,9 @@ int32_t tDeserializeSVDeleteReq(void *buf, int32_t bufLen, SVDeleteReq *pReq) {
if (tDecodeBinaryAlloc(&decoder, (void **)&pReq->msg, &msgLen) < 0) return -1;
pReq->phyLen = msgLen;

if (!tDecodeIsEnd(&decoder)) {
if (tDecodeI8(&decoder, &pReq->source) < 0) return -1;
}
tEndDecode(&decoder);

tDecoderClear(&decoder);

@@ -8427,6 +8431,7 @@ int32_t tEncodeDeleteRes(SEncoder *pCoder, const SDeleteRes *pRes) {
if (tEncodeCStr(pCoder, pRes->tableFName) < 0) return -1;
if (tEncodeCStr(pCoder, pRes->tsColName) < 0) return -1;
if (tEncodeI64(pCoder, pRes->ctimeMs) < 0) return -1;
if (tEncodeI8(pCoder, pRes->source) < 0) return -1;
return 0;
}

@@ -8451,6 +8456,9 @@ int32_t tDecodeDeleteRes(SDecoder *pCoder, SDeleteRes *pRes) {
if (!tDecodeIsEnd(pCoder)) {
if (tDecodeI64(pCoder, &pRes->ctimeMs) < 0) return -1;
}
if (!tDecodeIsEnd(pCoder)) {
if (tDecodeI8(pCoder, &pRes->source) < 0) return -1;
}
return 0;
}
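
Both delete-message decoders in these hunks read the `source` byte only when `tDecodeIsEnd` reports that input remains. This lets a receiver accept messages from senders that do not write the field. Below is a self-contained sketch of that idiom over a plain byte buffer. `Decoder`, `DeleteRes`, and the `dec_*` and `decode_delete_res` helpers are hypothetical, and the host-byte-order layout is an assumption for illustration, not TDengine's wire format.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical read cursor over an encoded message. */
typedef struct {
  const uint8_t *data;
  size_t len;
  size_t pos;
} Decoder;

static bool dec_is_end(const Decoder *d) { return d->pos >= d->len; }

static int dec_i64(Decoder *d, int64_t *out) {
  if (d->len - d->pos < sizeof(*out)) return -1; /* truncated input */
  memcpy(out, d->data + d->pos, sizeof(*out));   /* host byte order (assumption) */
  d->pos += sizeof(*out);
  return 0;
}

static int dec_i8(Decoder *d, int8_t *out) {
  if (d->len - d->pos < 1) return -1;
  *out = (int8_t)d->data[d->pos++];
  return 0;
}

/* Hypothetical subset of SDeleteRes: both fields are guarded by an end check, as in the hunk. */
typedef struct {
  int64_t ctimeMs;
  int8_t source;
} DeleteRes;

/* Each trailing field is read only if bytes remain; otherwise it keeps its default 0. */
static int decode_delete_res(const uint8_t *buf, size_t len, DeleteRes *res) {
  Decoder d = {buf, len, 0};
  res->ctimeMs = 0;
  res->source = 0;
  if (!dec_is_end(&d)) {
    if (dec_i64(&d, &res->ctimeMs) < 0) return -1;
  }
  if (!dec_is_end(&d)) {
    if (dec_i8(&d, &res->source) < 0) return -1;
  }
  return 0;
}
```

A field added this way can only be appended at the end. Inserting it earlier would shift every later field for readers that do not know about it.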

@@ -111,7 +111,7 @@ STrans *doCreateTrans(SMnode *pMnode, SStreamObj *pStream, SRpcMsg *pReq, const
int32_t mndPersistTransLog(SStreamObj *pStream, STrans *pTrans, int32_t status);
SSdbRaw *mndStreamActionEncode(SStreamObj *pStream);
void killAllCheckpointTrans(SMnode *pMnode, SVgroupChangeInfo *pChangeInfo);
int32_t mndStreamSetUpdateEpsetAction(SStreamObj *pStream, SVgroupChangeInfo *pInfo, STrans *pTrans);
int32_t mndStreamSetUpdateEpsetAction(SMnode *pMnode, SStreamObj *pStream, SVgroupChangeInfo *pInfo, STrans *pTrans);

SStreamObj *mndGetStreamObj(SMnode *pMnode, int64_t streamId);
int32_t extractNodeEpset(SMnode *pMnode, SEpSet *pEpSet, bool *hasEpset, int32_t taskId, int32_t nodeId);

@@ -610,7 +610,7 @@ static int32_t mndProcessStatisReq(SRpcMsg *pReq) {
for(int32_t j = 0; j < tagSize; j++){
SJson* item = tjsonGetArrayItem(arrayTag, j);

*(labels + j) = taosMemoryMalloc(MONITOR_TAG_NAME_LEN);
*(labels + j) = taosMemoryMalloc(MONITOR_TAG_NAME_LEN);
tjsonGetStringValue(item, "name", *(labels + j));

*(sample_labels + j) = taosMemoryMalloc(MONITOR_TAG_VALUE_LEN);

@@ -626,7 +626,7 @@ static int32_t mndProcessStatisReq(SRpcMsg *pReq) {
for(int32_t j = 0; j < metricLen; j++){
SJson *item = tjsonGetArrayItem(metrics, j);

char name[MONITOR_METRIC_NAME_LEN] = {0};
char name[MONITOR_METRIC_NAME_LEN] = {0};
tjsonGetStringValue(item, "name", name);

double value = 0;

@@ -636,7 +636,7 @@ static int32_t mndProcessStatisReq(SRpcMsg *pReq) {
tjsonGetDoubleValue(item, "type", &type);

int32_t metricNameLen = strlen(name) + strlen(tableName) + 2;
char* metricName = taosMemoryMalloc(metricNameLen);
char* metricName = taosMemoryMalloc(metricNameLen);
memset(metricName, 0, metricNameLen);
sprintf(metricName, "%s:%s", tableName, name);
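
In `mndProcessStatisReq` the metric name has the form `tableName:name`. The `strlen(name) + strlen(tableName) + 2` buffer size therefore reserves one byte for the `:` and one for the terminating NUL. A bounded variant using `snprintf` is sketched below. `build_metric_name` is a hypothetical helper, and `malloc`/`free` stand in for `taosMemoryMalloc`/`taosMemoryFree`.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: returns a heap string "tableName:name", or NULL on failure.
 * The caller releases it with free(). */
static char *build_metric_name(const char *tableName, const char *name) {
  /* +2: one byte for ':' and one for the terminating '\0' */
  size_t len = strlen(tableName) + strlen(name) + 2;
  char *out = malloc(len);
  if (out == NULL) return NULL;
  int n = snprintf(out, len, "%s:%s", tableName, name);
  if (n < 0 || (size_t)n >= len) { /* encoding error or size mismatch */
    free(out);
    return NULL;
  }
  return out;
}
```

`snprintf` always NUL-terminates within the given size, so the separate `memset` becomes unnecessary. The return-value check turns a sizing mistake into a `NULL` result instead of an overflow.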

@@ -669,7 +669,7 @@ static int32_t mndProcessStatisReq(SRpcMsg *pReq) {
else{
mTrace("get metric from registry:%p", metric);
}


if(type == 0){
taos_counter_add(metric, value, (const char**)sample_labels);
}

@@ -689,7 +689,7 @@ static int32_t mndProcessStatisReq(SRpcMsg *pReq) {
taosMemoryFreeClear(labels);
}
}


}

code = 0;

@@ -1409,24 +1409,6 @@ static int32_t mndProcessConfigDnodeReq(SRpcMsg *pReq) {
if (strcasecmp(cfgReq.config, "resetlog") == 0) {
strcpy(dcfgReq.config, "resetlog");
#ifdef TD_ENTERPRISE
} else if (strncasecmp(cfgReq.config, "supportvnodes", 13) == 0) {
int32_t optLen = strlen("supportvnodes");
int32_t flag = -1;
int32_t code = mndMCfgGetValInt32(&cfgReq, optLen, &flag);
if (code < 0) return code;

if (flag < 0 || flag > 4096) {
mError("dnode:%d, failed to config supportVnodes since value:%d. Valid range: [0, 4096]", cfgReq.dnodeId, flag);
terrno = TSDB_CODE_OUT_OF_RANGE;
goto _err_out;
}
if (flag == 0) {
flag = tsNumOfCores * 2;
}
flag = TMAX(flag, 2);

strcpy(dcfgReq.config, "supportvnodes");
snprintf(dcfgReq.value, TSDB_DNODE_VALUE_LEN, "%d", flag);
} else if (strncasecmp(cfgReq.config, "s3blocksize", 11) == 0) {
int32_t optLen = strlen("s3blocksize");
int32_t flag = -1;
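
The `supportvnodes` branch in this hunk does three things:

* It rejects values outside [0, 4096].
* It treats 0 as "derive from the core count" (`tsNumOfCores * 2`).
* It applies `TMAX(flag, 2)`, so the result is never below 2.

Below is a standalone sketch of those rules. `resolve_support_vnodes` and its `-1` error return are hypothetical; the original sets `terrno = TSDB_CODE_OUT_OF_RANGE` and jumps to `_err_out`.

```c
#include <stdint.h>

/* Hypothetical sketch of the supportvnodes rules from the hunk:
 * - reject values outside [0, 4096]
 * - 0 means "derive from cores": numOfCores * 2
 * - the result is never below 2 (TMAX(flag, 2))
 * Returns the resolved value, or -1 if the request is out of range. */
static int32_t resolve_support_vnodes(int32_t requested, int32_t numOfCores) {
  if (requested < 0 || requested > 4096) return -1;
  int32_t value = (requested == 0) ? numOfCores * 2 : requested;
  return value < 2 ? 2 : value;
}
```

A request of 1 is accepted by the range check but raised to 2, matching the order of operations in the hunk.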

@@ -597,6 +597,7 @@ static int32_t mndSetUpdateIdxStbCommitLogs(SMnode *pMnode, STrans *pTrans, SStb
taosRUnLockLatch(&pOld->lock);

pNew->pTags = NULL;
pNew->pColumns = NULL;
pNew->updateTime = taosGetTimestampMs();
pNew->lock = 0;

@@ -721,6 +721,8 @@ static int32_t mndProcessCreateStreamReq(SRpcMsg *pReq) {
goto _OVER;
}

// add into buffer firstly
// to make sure when the hb from vnode arrived, the newly created tasks have been in the task map already.
taosThreadMutexLock(&execInfo.lock);
mDebug("stream stream:%s tasks register into node list", createReq.name);
saveStreamTasksInfo(&streamObj, &execInfo);

@@ -1811,7 +1813,7 @@ static int32_t mndProcessVgroupChange(SMnode *pMnode, SVgroupChangeInfo *pChange
mDebug("stream:0x%" PRIx64 " %s involved node changed, create update trans, transId:%d", pStream->uid,
pStream->name, pTrans->id);

int32_t code = mndStreamSetUpdateEpsetAction(pStream, pChangeInfo, pTrans);
int32_t code = mndStreamSetUpdateEpsetAction(pMnode, pStream, pChangeInfo, pTrans);

// todo: not continue, drop all and retry again
if (code != TSDB_CODE_SUCCESS) {

@@ -115,6 +115,7 @@ SArray *mndTakeVgroupSnapshot(SMnode *pMnode, bool *allReady) {

char buf[256] = {0};
EPSET_TO_STR(&entry.epset, buf);

mDebug("take node snapshot, nodeId:%d %s", entry.nodeId, buf);
taosArrayPush(pVgroupListSnapshot, &entry);
sdbRelease(pSdb, pVgroup);

@@ -300,7 +301,10 @@ static int32_t doSetPauseAction(SMnode *pMnode, STrans *pTrans, SStreamTask *pTa
return code;
}

mDebug("pause node:%d, epset:%d", pTask->info.nodeId, epset.numOfEps);
char buf[256] = {0};
EPSET_TO_STR(&epset, buf);
mDebug("pause stream task in node:%d, epset:%s", pTask->info.nodeId, buf);

code = setTransAction(pTrans, pReq, sizeof(SVPauseStreamTaskReq), TDMT_STREAM_TASK_PAUSE, &epset, 0);
if (code != 0) {
taosMemoryFree(pReq);

@@ -462,14 +466,22 @@ static int32_t doBuildStreamTaskUpdateMsg(void **pBuf, int32_t *pLen, SVgroupCha
return TSDB_CODE_SUCCESS;
}

static int32_t doSetUpdateTaskAction(STrans *pTrans, SStreamTask *pTask, SVgroupChangeInfo *pInfo) {
static int32_t doSetUpdateTaskAction(SMnode *pMnode, STrans *pTrans, SStreamTask *pTask, SVgroupChangeInfo *pInfo) {
void *pBuf = NULL;
int32_t len = 0;
streamTaskUpdateEpsetInfo(pTask, pInfo->pUpdateNodeList);

doBuildStreamTaskUpdateMsg(&pBuf, &len, pInfo, pTask->info.nodeId, &pTask->id, pTrans->id);

int32_t code = setTransAction(pTrans, pBuf, len, TDMT_VND_STREAM_TASK_UPDATE, &pTask->info.epSet, 0);
SEpSet epset = {0};
bool hasEpset = false;
int32_t code = extractNodeEpset(pMnode, &epset, &hasEpset, pTask->id.taskId, pTask->info.nodeId);
if (code != TSDB_CODE_SUCCESS || !hasEpset) {
terrno = code;
return code;
}

code = setTransAction(pTrans, pBuf, len, TDMT_VND_STREAM_TASK_UPDATE, &epset, TSDB_CODE_VND_INVALID_VGROUP_ID);
if (code != TSDB_CODE_SUCCESS) {
taosMemoryFree(pBuf);
}

@@ -478,14 +490,14 @@ static int32_t doSetUpdateTaskAction(STrans *pTrans, SStreamTask *pTask, SVgroup
}

// build trans to update the epset
int32_t mndStreamSetUpdateEpsetAction(SStreamObj *pStream, SVgroupChangeInfo *pInfo, STrans *pTrans) {
int32_t mndStreamSetUpdateEpsetAction(SMnode *pMnode, SStreamObj *pStream, SVgroupChangeInfo *pInfo, STrans *pTrans) {
mDebug("stream:0x%" PRIx64 " set tasks epset update action", pStream->uid);
taosWLockLatch(&pStream->lock);

SStreamTaskIter *pIter = createStreamTaskIter(pStream);
while (streamTaskIterNextTask(pIter)) {
SStreamTask *pTask = streamTaskIterGetCurrent(pIter);
int32_t code = doSetUpdateTaskAction(pTrans, pTask, pInfo);
int32_t code = doSetUpdateTaskAction(pMnode, pTrans, pTask, pInfo);
if (code != TSDB_CODE_SUCCESS) {
destroyStreamTaskIter(pIter);
taosWUnLockLatch(&pStream->lock);

@@ -15,11 +15,11 @@

#define _DEFAULT_SOURCE
#include "mndTrans.h"
#include "mndSubscribe.h"
#include "mndDb.h"
#include "mndPrivilege.h"
#include "mndShow.h"
#include "mndStb.h"
#include "mndSubscribe.h"
#include "mndSync.h"
#include "mndUser.h"

@@ -801,16 +801,17 @@ static bool mndCheckTransConflict(SMnode *pMnode, STrans *pNew) {
if (pNew->conflict == TRN_CONFLICT_TOPIC) {
if (pTrans->conflict == TRN_CONFLICT_GLOBAL) conflict = true;
if (pTrans->conflict == TRN_CONFLICT_TOPIC || pTrans->conflict == TRN_CONFLICT_TOPIC_INSIDE) {
if (strcasecmp(pNew->dbname, pTrans->dbname) == 0 ) conflict = true;
if (strcasecmp(pNew->dbname, pTrans->dbname) == 0) conflict = true;
}
}
if (pNew->conflict == TRN_CONFLICT_TOPIC_INSIDE) {
if (pTrans->conflict == TRN_CONFLICT_GLOBAL) conflict = true;
if (pTrans->conflict == TRN_CONFLICT_TOPIC ) {
if (strcasecmp(pNew->dbname, pTrans->dbname) == 0 ) conflict = true;
if (pTrans->conflict == TRN_CONFLICT_TOPIC) {
if (strcasecmp(pNew->dbname, pTrans->dbname) == 0) conflict = true;
}
if (pTrans->conflict == TRN_CONFLICT_TOPIC_INSIDE) {
if (strcasecmp(pNew->dbname, pTrans->dbname) == 0 && strcasecmp(pNew->stbname, pTrans->stbname) == 0) conflict = true;
if (strcasecmp(pNew->dbname, pTrans->dbname) == 0 && strcasecmp(pNew->stbname, pTrans->stbname) == 0)
conflict = true;
}
}
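
The topic rules in `mndCheckTransConflict` can be read as a small table:

* A running global transaction always conflicts.
* A new `TOPIC` transaction conflicts with a running `TOPIC` or `TOPIC_INSIDE` one on the same database.
* A new `TOPIC_INSIDE` transaction conflicts with a running `TOPIC` on the same database, or with a running `TOPIC_INSIDE` on the same database and supertable.

Names compare case-insensitively. Below is a standalone sketch of only those rules. `ConflictKind`, `TransInfo`, and `topic_trans_conflict` are hypothetical simplifications of `STrans` and the `TRN_CONFLICT_*` values.

```c
#include <stdbool.h>
#include <strings.h> /* strcasecmp (POSIX) */

/* Hypothetical subset of the transaction conflict kinds. */
typedef enum { CONFLICT_NOTHING, CONFLICT_GLOBAL, CONFLICT_TOPIC, CONFLICT_TOPIC_INSIDE } ConflictKind;

/* Hypothetical view of a transaction: only what the topic rules inspect. */
typedef struct {
  ConflictKind conflict;
  const char *dbname;
  const char *stbname;
} TransInfo;

/* Topic-related rules only: returns true if pNew conflicts with the running pTrans. */
static bool topic_trans_conflict(const TransInfo *pNew, const TransInfo *pTrans) {
  bool sameDb = strcasecmp(pNew->dbname, pTrans->dbname) == 0;
  if (pNew->conflict == CONFLICT_TOPIC) {
    if (pTrans->conflict == CONFLICT_GLOBAL) return true;
    if ((pTrans->conflict == CONFLICT_TOPIC || pTrans->conflict == CONFLICT_TOPIC_INSIDE) && sameDb) return true;
  } else if (pNew->conflict == CONFLICT_TOPIC_INSIDE) {
    if (pTrans->conflict == CONFLICT_GLOBAL) return true;
    if (pTrans->conflict == CONFLICT_TOPIC && sameDb) return true;
    if (pTrans->conflict == CONFLICT_TOPIC_INSIDE && sameDb &&
        strcasecmp(pNew->stbname, pTrans->stbname) == 0)
      return true;
  }
  return false;
}
```

Two `TOPIC_INSIDE` transactions on different supertables of one database can proceed together. A `TOPIC` transaction serializes against every topic transaction in its database.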

@@ -847,7 +848,7 @@ int32_t mndTransCheckConflict(SMnode *pMnode, STrans *pTrans) {
}

int32_t mndTransPrepare(SMnode *pMnode, STrans *pTrans) {
if(pTrans == NULL) return -1;
if (pTrans == NULL) return -1;

if (mndTransCheckConflict(pMnode, pTrans) != 0) {
return -1;

@@ -1142,6 +1143,7 @@ static int32_t mndTransSendSingleMsg(SMnode *pMnode, STrans *pTrans, STransActio
return -1;
}
rpcMsg.info.traceId.rootId = pTrans->mTraceId;
rpcMsg.info.notFreeAhandle = 1;

memcpy(rpcMsg.pCont, pAction->pCont, pAction->contLen);

@@ -1156,7 +1158,7 @@ static int32_t mndTransSendSingleMsg(SMnode *pMnode, STrans *pTrans, STransActio
int32_t code = tmsgSendReq(&pAction->epSet, &rpcMsg);
if (code == 0) {
pAction->msgSent = 1;
//pAction->msgReceived = 0;
// pAction->msgReceived = 0;
pAction->errCode = TSDB_CODE_ACTION_IN_PROGRESS;
mInfo("trans:%d, %s:%d is sent, %s", pTrans->id, mndTransStr(pAction->stage), pAction->id, detail);

@@ -1253,16 +1255,16 @@ static int32_t mndTransExecuteActions(SMnode *pMnode, STrans *pTrans, SArray *pA

for (int32_t action = 0; action < numOfActions; ++action) {
STransAction *pAction = taosArrayGet(pArray, action);
mDebug("trans:%d, %s:%d Sent:%d, Received:%d, errCode:0x%x, acceptableCode:0x%x, retryCode:0x%x",
pTrans->id, mndTransStr(pAction->stage), pAction->id, pAction->msgSent, pAction->msgReceived,
pAction->errCode, pAction->acceptableCode, pAction->retryCode);
mDebug("trans:%d, %s:%d Sent:%d, Received:%d, errCode:0x%x, acceptableCode:0x%x, retryCode:0x%x", pTrans->id,
mndTransStr(pAction->stage), pAction->id, pAction->msgSent, pAction->msgReceived, pAction->errCode,
pAction->acceptableCode, pAction->retryCode);
if (pAction->msgSent) {
if (pAction->msgReceived) {
if (pAction->errCode != 0 && pAction->errCode != pAction->acceptableCode) {
mndTransResetAction(pMnode, pTrans, pAction);
mInfo("trans:%d, %s:%d reset", pTrans->id, mndTransStr(pAction->stage), pAction->id);
}
}
}
}
}
return TSDB_CODE_ACTION_IN_PROGRESS;

@@ -106,7 +106,7 @@ typedef struct SQueryNode SQueryNode;
#define VND_INFO_FNAME "vnode.json"
#define VND_INFO_FNAME_TMP "vnode_tmp.json"

#define VNODE_METRIC_SQL_COUNT "taos_sql_req:count"
#define VNODE_METRIC_SQL_COUNT "taosd_sql_req:count"

#define VNODE_METRIC_TAG_NAME_SQL_TYPE "sql_type"
#define VNODE_METRIC_TAG_NAME_CLUSTER_ID "cluster_id"

@@ -917,6 +917,22 @@ static void doStartFillhistoryStep2(SStreamTask* pTask, SStreamTask* pStreamTask
}
}

int32_t handleStep2Async(SStreamTask* pStreamTask, void* param) {
STQ* pTq = param;

SStreamMeta* pMeta = pStreamTask->pMeta;
STaskId hId = pStreamTask->hTaskInfo.id;
SStreamTask* pTask = streamMetaAcquireTask(pStreamTask->pMeta, hId.streamId, hId.taskId);
if (pTask == NULL) {
// todo handle error
}

doStartFillhistoryStep2(pTask, pStreamTask, pTq);

streamMetaReleaseTask(pMeta, pTask);
return 0;
}

// this function should be executed by only one thread, so we set an sentinel to protect this function
int32_t tqProcessTaskScanHistory(STQ* pTq, SRpcMsg* pMsg) {
SStreamScanHistoryReq* pReq = (SStreamScanHistoryReq*)pMsg->pCont;

@@ -1007,37 +1023,27 @@ int32_t tqProcessTaskScanHistory(STQ* pTq, SRpcMsg* pMsg) {
// the following procedure should be executed, no matter status is stop/pause or not
tqDebug("s-task:%s scan-history(step 1) ended, elapsed time:%.2fs", id, pTask->execInfo.step1El);

if (pTask->info.fillHistory) {
SStreamTask* pStreamTask = NULL;
ASSERT(pTask->info.fillHistory == 1);

// 1. get the related stream task
pStreamTask = streamMetaAcquireTask(pMeta, pTask->streamTaskId.streamId, pTask->streamTaskId.taskId);
if (pStreamTask == NULL) {
tqError("failed to find s-task:0x%" PRIx64 ", it may have been destroyed, drop related fill-history task:%s",
pTask->streamTaskId.taskId, pTask->id.idStr);
// 1. get the related stream task
SStreamTask* pStreamTask = streamMetaAcquireTask(pMeta, pTask->streamTaskId.streamId, pTask->streamTaskId.taskId);
if (pStreamTask == NULL) {
tqError("failed to find s-task:0x%" PRIx64 ", it may have been destroyed, drop related fill-history task:%s",
pTask->streamTaskId.taskId, pTask->id.idStr);

tqDebug("s-task:%s fill-history task set status to be dropping and drop it", id);
streamBuildAndSendDropTaskMsg(pTask->pMsgCb, pMeta->vgId, &pTask->id, 0);

atomic_store_32(&pTask->status.inScanHistorySentinel, 0);
streamMetaReleaseTask(pMeta, pTask);
return -1;
}

ASSERT(pStreamTask->info.taskLevel == TASK_LEVEL__SOURCE);

code = streamTaskHandleEvent(pStreamTask->status.pSM, TASK_EVENT_HALT);
if (code == TSDB_CODE_SUCCESS) {
doStartFillhistoryStep2(pTask, pStreamTask, pTq);
} else {
tqError("s-task:%s failed to halt s-task:%s, not launch step2", id, pStreamTask->id.idStr);
}

streamMetaReleaseTask(pMeta, pStreamTask);
} else {
ASSERT(0);
atomic_store_32(&pTask->status.inScanHistorySentinel, 0);
streamMetaReleaseTask(pMeta, pTask);
return -1;
}

ASSERT(pStreamTask->info.taskLevel == TASK_LEVEL__SOURCE);
code = streamTaskHandleEventAsync(pStreamTask->status.pSM, TASK_EVENT_HALT, handleStep2Async, pTq);

streamMetaReleaseTask(pMeta, pStreamTask);

atomic_store_32(&pTask->status.inScanHistorySentinel, 0);
streamMetaReleaseTask(pMeta, pTask);
return code;

@@ -368,24 +368,11 @@ int32_t extractMsgFromWal(SWalReader* pReader, void** pItem, int64_t maxVer, con
}
}

// todo ignore the error in wal?
bool tqNextBlockInWal(STqReader* pReader, const char* id, int sourceExcluded) {
SWalReader* pWalReader = pReader->pWalReader;
SSDataBlock* pDataBlock = NULL;

uint64_t st = taosGetTimestampMs();
while (1) {
// try next message in wal file
if (walNextValidMsg(pWalReader) < 0) {
return false;
}

void* pBody = POINTER_SHIFT(pWalReader->pHead->head.body, sizeof(SSubmitReq2Msg));
int32_t bodyLen = pWalReader->pHead->head.bodyLen - sizeof(SSubmitReq2Msg);
int64_t ver = pWalReader->pHead->head.version;

tqReaderSetSubmitMsg(pReader, pBody, bodyLen, ver);
pReader->nextBlk = 0;
int32_t numOfBlocks = taosArrayGetSize(pReader->submit.aSubmitTbData);
while (pReader->nextBlk < numOfBlocks) {
tqTrace("tq reader next data block %d/%d, len:%d %" PRId64, pReader->nextBlk, numOfBlocks, pReader->msg.msgLen,

@@ -400,33 +387,32 @@ bool tqNextBlockInWal(STqReader* pReader, const char* id, int sourceExcluded) {
tqTrace("tq reader return submit block, uid:%" PRId64, pSubmitTbData->uid);
SSDataBlock* pRes = NULL;
int32_t code = tqRetrieveDataBlock(pReader, &pRes, NULL);
if (code == TSDB_CODE_SUCCESS && pRes->info.rows > 0) {
if (pDataBlock == NULL) {
pDataBlock = createOneDataBlock(pRes, true);
} else {
blockDataMerge(pDataBlock, pRes);
}
if (code == TSDB_CODE_SUCCESS) {
return true;
}
} else {
pReader->nextBlk += 1;
tqTrace("tq reader discard submit block, uid:%" PRId64 ", continue", pSubmitTbData->uid);
}
}

tDestroySubmitReq(&pReader->submit, TSDB_MSG_FLG_DECODE);
pReader->msg.msgStr = NULL;

if (pDataBlock != NULL) {
blockDataCleanup(pReader->pResBlock);
copyDataBlock(pReader->pResBlock, pDataBlock);
blockDataDestroy(pDataBlock);
return true;
} else {
qTrace("stream scan return empty, all %d submit blocks consumed, %s", numOfBlocks, id);
}

if (taosGetTimestampMs() - st > 1000) {
return false;
}

// try next message in wal file
if (walNextValidMsg(pWalReader) < 0) {
return false;
}

void* pBody = POINTER_SHIFT(pWalReader->pHead->head.body, sizeof(SSubmitReq2Msg));
int32_t bodyLen = pWalReader->pHead->head.bodyLen - sizeof(SSubmitReq2Msg);
int64_t ver = pWalReader->pHead->head.version;
tqReaderSetSubmitMsg(pReader, pBody, bodyLen, ver);
pReader->nextBlk = 0;
}
}
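
The merging path of `tqNextBlockInWal` in these hunks works as follows:

* It reads WAL messages in a loop.
* It accumulates every non-empty block it retrieves (`createOneDataBlock` for the first, `blockDataMerge` afterwards).
* Once a message has produced data, it copies the result into `pResBlock` and returns `true`.
* It returns `false` when `walNextValidMsg` fails, or when more than 1000 ms have passed since the call started without any data.

Below is a simplified model of that control flow. `Message`, `WalCursor`, `wall_ms`, and `next_block_in_wal` are hypothetical. Row counts stand in for `SSDataBlock` merging, and the clock is passed in as a function pointer.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical model of a WAL message: the row count of each block it carries. */
typedef struct {
  const int *blockRows;
  size_t numOfBlocks;
} Message;

/* Hypothetical cursor over a sequence of WAL messages. */
typedef struct {
  const Message *msgs;
  size_t numOfMsgs;
  size_t next; /* index of the next unread message */
} WalCursor;

/* Wall-clock milliseconds via C11 timespec_get (stand-in for taosGetTimestampMs). */
static uint64_t wall_ms(void) {
  struct timespec ts;
  timespec_get(&ts, TIME_UTC);
  return (uint64_t)ts.tv_sec * 1000u + (uint64_t)ts.tv_nsec / 1000000u;
}

/* Returns true with *mergedRows set once a message yields non-empty blocks;
 * returns false when no message is left or budgetMs passes without data. */
static bool next_block_in_wal(WalCursor *wal, uint64_t (*nowMs)(void), uint64_t budgetMs,
                              int *mergedRows) {
  uint64_t st = nowMs();
  while (1) {
    if (wal->next >= wal->numOfMsgs) return false; /* no further valid message */
    const Message *m = &wal->msgs[wal->next++];

    int rows = 0;
    bool gotData = false;
    for (size_t i = 0; i < m->numOfBlocks; ++i) {
      if (m->blockRows[i] > 0) { /* non-empty block: merge into the result */
        rows += m->blockRows[i];
        gotData = true;
      } /* empty block: discard it and continue */
    }
    if (gotData) {
      *mergedRows = rows;
      return true;
    }
    if (nowMs() - st > budgetMs) return false; /* budget spent on empty messages */
  }
}
```

Passing the clock in keeps the time budget testable. A fake clock that advances on every call would exercise the timeout branch without sleeping.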